This is the blog section. It has two categories: News and Releases.

Files in these directories will be listed in reverse chronological order.


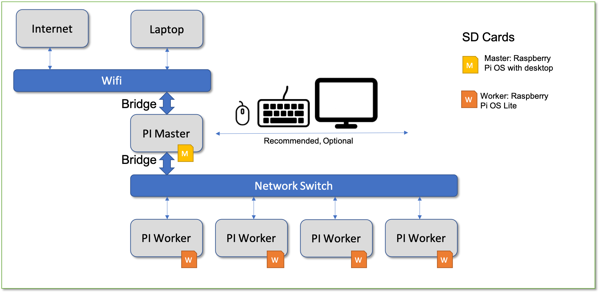

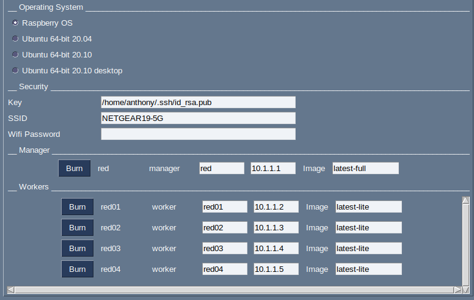

In this tutorial, we explain how to easily set up a preconfigured cluster of Pis using Raspberry Pi OS. You can burn the SD cards from either a Windows 10 desktop/laptop or a Pi 4 running 64-bit Raspberry Pi OS. The cluster is ready to boot after all cards have been burned, and no other configuration is needed. You can choose to use 64-bit or 32-bit Raspberry Pi OS on the cluster nodes.

Learning Objectives

Topics covered

Info

Disclaimer

As this tool formats disks, we take no responsibility for its proper function or for any data you may lose by using it. A best practice is to create a backup of your system before trying this. If you do not want to run the tool on your computer, you can always run it on a Raspberry Pi. If something goes wrong, you just have to reburn the Pi. We recommend a Pi 4, as it has a faster USB port and allows faster burn times than previous generations of Pis.

With the release of Pi Imager 1.6, it is possible to configure a Raspberry Pi running Raspberry Pi OS from any operating system. Pi Imager only uses a limited number of parameters, but our system adds the network configurations needed to create a cluster. In addition to a static network configuration, you can also use the tool to burn a cluster that you add to a mesh network. The configurations are executed automatically after the first boot. Supported operating systems for this program include Raspberry Pi OS, Ubuntu 20.04, macOS, Windows 10, and Windows 11 (under development). On http://piplanet.org we provide additional tutorials that are designed for specific operating systems.

Our tutorials are beneficial as, typically, many steps are involved in setting up a cluster. This requires either the replication of time-consuming tasks that can be automated or the knowledge of DevOps frameworks that you may not be familiar with.

We avoid this by simply burning a card for each of the PIs. No more hours wasted on setting up your initial cluster.

We developed a special command called cms burn to facilitate this. It allows us to create preconfigured cards with the necessary information. The features this command supports include:

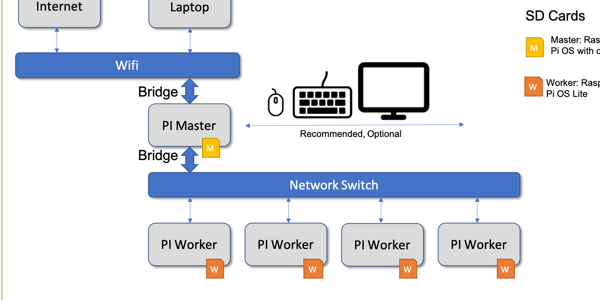

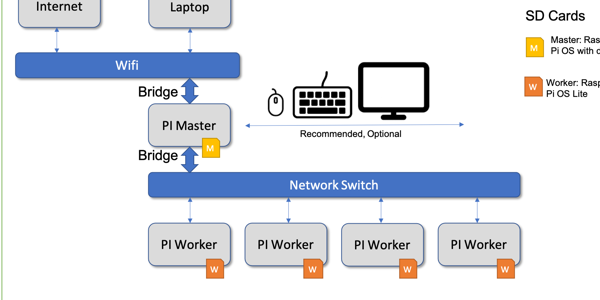

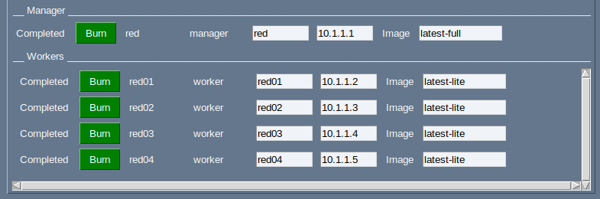

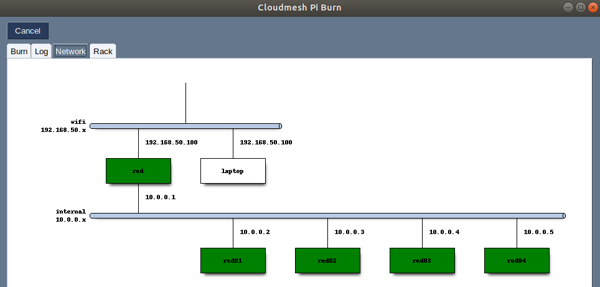

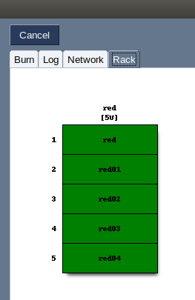

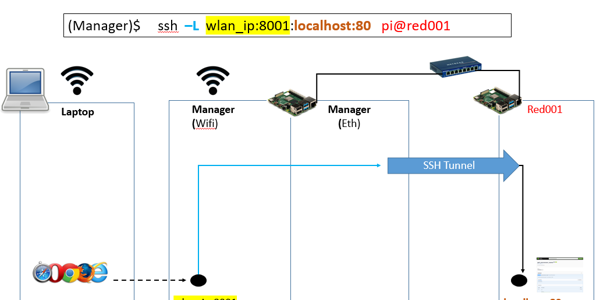

We demonstrate the usage of the cms burn command by creating a cluster of 5 Pis (1 manager, 4 workers). The manager connects to the internet via WiFi, and the workers access the internet through the manager via an ethernet connection. Because the internet connection is tunneled through the manager Pi, this setup is useful for those with restricted internet access, where devices must be registered by MAC address or through a browser login.

In addition, we show how to set up the cluster in a mesh network as an alternative.

python3 --version > 3.9.2

For the parts needed for different Pi cluster configurations, please see our links at piplanet.org.

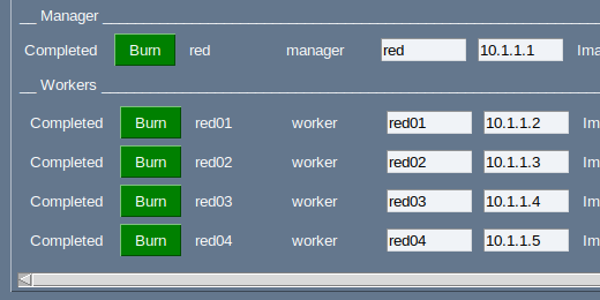

In our tutorial, we name the manager red, and each worker has a number in its name: red01, red02, red03, red04. Our tool identifies the manager node as the one without a number. You can, of course, use a name other than red. This is also handy if you want to create multiple clusters to form a distributed cluster environment for regular use or development purposes.
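The naming convention can be illustrated with a short Python sketch (cloudmesh itself is a Python tool). The helpers expand_hosts and split_manager are hypothetical. They only handle the simple prefix[start-end] ranges used in this tutorial, such as red,red0[1-4] or red,red[01-04]. They also approximate "without a number" as "not ending in a digit". This is not how cms parses host lists internally.

```python
import re

# Matches the simple "prefix[start-end]" form, e.g. "red0[1-4]" or "red[01-04]".
RANGE = re.compile(r"^(?P<prefix>[^\[\]]*)\[(?P<start>\d+)-(?P<end>\d+)\]$")


def expand_hosts(spec: str) -> list[str]:
    """Expand a comma-separated host list such as "red,red0[1-4]" (hypothetical helper)."""
    hosts = []
    for part in spec.split(","):
        part = part.strip()
        match = RANGE.match(part)
        if match is None:
            hosts.append(part)  # plain host name, e.g. "red"
            continue
        start, end = match["start"], match["end"]
        width = len(start)  # keep zero padding, e.g. "01" -> two digits
        for number in range(int(start), int(end) + 1):
            hosts.append(f"{match['prefix']}{number:0{width}d}")
    return hosts


def split_manager(hosts: list[str]) -> tuple[str, list[str]]:
    """Treat the single host whose name does not end in a digit as the manager."""
    managers = [h for h in hosts if not h[-1].isdigit()]
    if len(managers) != 1:
        raise ValueError(f"expected exactly one manager, found {managers}")
    workers = [h for h in hosts if h not in managers]
    return managers[0], workers


manager, workers = split_manager(expand_hosts("red,red0[1-4]"))
print(manager, workers)  # red ['red01', 'red02', 'red03', 'red04']
```

Both red0[1-4] and red[01-04] expand to the same names, because the sketch keeps the zero padding of the range start.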

The name of the device representing the SD card differs between Windows and Linux, Raspberry Pi OS, and macOS. On Windows, the device is called a disk and has a number. On the other operating systems, it is specified with a device location such as /dev/sdX, where X is typically a lowercase letter.

There are multiple ways to configure a cluster. In this tutorial, we provide two of them, depending on which network router you have: a classical network router or a mesh network router.

We provide illustrations for both in the following. You will have to determine which network type fits your setup, because the burn task differs slightly between them. We point out the difference later.

Cloudmesh burn runs on many different versions of Python, including Python installed either with conda or from python.org, because it can be installed with pip, which both support. If you are not using conda and are unsure which to choose, we recommend the Python version from python.org.

First, we update pip and verify that your Python and pip versions are correct.

| |
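If you prefer to check the requirement python3 --version > 3.9.2 from within Python, the following minimal sketch compares sys.version_info against that threshold. The function name python_is_supported is hypothetical, and the sketch reads the requirement literally as "strictly greater than 3.9.2".

```python
import sys

# Threshold taken from the requirement "python3 --version > 3.9.2".
REQUIRED = (3, 9, 2)


def python_is_supported(version=None) -> bool:
    """Return True if the given (or the running) Python version is newer than 3.9.2."""
    if version is None:
        version = sys.version_info[:3]
    return tuple(version) > REQUIRED


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    status = "OK" if python_is_supported() else "too old"
    print(f"Python {current}: {status}")
```

Tuple comparison orders versions component by component, so (3, 10, 0) correctly counts as newer than (3, 9, 2).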

The pip install is not yet supported! Please use the Install from source installation documentation instead. Once we officially release this code, the install from pip can be used.

~~(ENV3) you@yourlaptop $ pip install cloudmesh-pi-cluster~~

If you are a developer who would like to add new features, we recommend our source setup. We start after you have created the virtualenv. We then install our convenient cloudmesh-installer and create a cm directory into which we download the sources.

| |

This directory will now contain all source code, and the installation also provides the needed cms command.

We use ssh to easily log in to the manager and worker nodes from the laptop/desktop. Hence, we create a keypair in ~/.ssh. You can create one as follows, accepting the default location ~/.ssh/id_rsa:

| |

Please use a unique and strong passphrase. We will use this default key to access our cluster after burning.

In case you do not always want to type in your passphrase, you can use ssh-agent in your terminal.

We are now ready to burn our cluster. Start by making sure you have the latest images to burn. You can choose either the 64-bit or the 32-bit image; for our example, we choose the 64-bit image. First, we need to download it.

This command creates a cached version of the information related to the download URLs, along with a version tag that we will use for downloading the image. You can also use different images for the manager and the workers by passing their tags as a comma-separated list.

To choose a different image, you can inspect the versions and tags as well as the originating URLs with

| |

To see only the latest versions, type

| |

which returns an output similar to

+--------------------+------------+-------------+-------------+-----------------------------------------+

| Tag | Date | OS | Type | Version |

|--------------------+------------+-------------+-------------+-----------------------------------------|

| latest-lite | 2022-04-07 | raspberryos | lite | raspios_lite_armhf-2022-04-07 |

| latest-full | 2022-04-07 | raspberryos | full | raspios_full_armhf-2022-04-07 |

| latest-lite-32 | 2022-04-07 | raspberryos | lite-32 | raspios_lite_armhf-2022-04-07 |

| latest-full-32 | 2022-04-07 | raspberryos | full-32 | raspios_full_armhf-2022-04-07 |

| latest-lite-64 | 2022-04-07 | raspberryos | lite-64 | raspios_lite_arm64-2022-04-07 |

| latest-full-64 | 2022-04-07 | raspberryos | full-64 | raspios_arm64-2022-04-07 |

| latest-lite-legacy | 2022-04-07 | raspberryos | lite-legacy | raspios_oldstable_lite_armhf-2022-04-07 |

| latest-full-legacy | 2022-04-07 | raspberryos | full-legacy | raspios_oldstable_armhf-2022-04-07 |

+--------------------+------------+-------------+-------------+-----------------------------------------+

Next, plug your first SD card into your card writer. Check your writer’s path with the following command.

Note that we omitted some output of cms burn info for clarity.

Depending on your system, this command may take a minute to complete.

If you receive a warning, inspect it carefully. One possible reason is that your reader is too new and we do not yet have it in our database of recognized readers. As long as you see Removable Media and GENERIC STORAGE DEVICE, it will be fine.

To burn the latest 64-bit OS, use the following command. If you would like to burn other images identified by tags, you can list them with the command cms burn versions --refresh. The --tag option can take multiple comma-separated arguments, so you can specify a tag for each SD card individually. A special form is --tag=latest-full-64,latest-lite-64, which burns the full image on the manager and the lite image on the workers. As mentioned before, however, we will just burn the lite 64-bit image on all cards.
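The following Python sketch shows one plausible reading of how comma-separated --tag values map onto SD cards. The function assign_tags is hypothetical and only mirrors the rules described above: one tag for all cards, one tag per card, or the special manager/worker form with the manager listed first. It is not the cms burn implementation.

```python
def assign_tags(hosts: list[str], tag_option: str) -> dict[str, str]:
    """Map each host to an image tag (hypothetical reading of --tag).

    Assumed rules: a single tag applies to every host; one tag per host is
    assigned in order; two tags for a larger cluster mean
    "manager tag, worker tag", with the manager listed first in hosts.
    """
    tags = [t.strip() for t in tag_option.split(",") if t.strip()]
    if len(tags) == 1:
        return {host: tags[0] for host in hosts}
    if len(tags) == len(hosts):
        return dict(zip(hosts, tags))
    if len(tags) == 2:
        manager, *workers = hosts
        return {manager: tags[0], **{worker: tags[1] for worker in workers}}
    raise ValueError(f"cannot map {len(tags)} tags onto {len(hosts)} hosts")


hosts = ["red", "red01", "red02", "red03", "red04"]
print(assign_tags(hosts, "latest-full-64,latest-lite-64"))
```

With the special form, red receives latest-full-64 and the four workers receive latest-lite-64.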

Timezones can be found at https://en.wikipedia.org/wiki/List_of_tz_database_time_zones. Timezone names typically contain forward slashes. However, forward slashes have a specific meaning in our Python implementation and would interfere with it.

Therefore, we use - instead of /.

When entering timezones for the --timezone parameter, replace forward slashes with hyphens, as shown in the next example. The timezone

America/Indiana/Indianapolis

must be entered with ‘-’ in the --timezone parameter:

--timezone="America-Indiana-Indianapolis"
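The conversion is a plain character substitution. A minimal Python sketch follows; the helper names to_cms_timezone and timezone_option are hypothetical.

```python
def to_cms_timezone(tz_name: str) -> str:
    """Convert an IANA time zone name into the hyphenated form used for --timezone."""
    return tz_name.replace("/", "-")


def timezone_option(tz_name: str) -> str:
    """Build the --timezone argument with the value in double quotes."""
    return f'--timezone="{to_cms_timezone(tz_name)}"'


print(timezone_option("America/Indiana/Indianapolis"))
# --timezone="America-Indiana-Indianapolis"
```

The substitution only works in one direction. Some zone names already contain hyphens (for example America/Port-au-Prince), so replacing every hyphen with a slash would not reliably recover the original name.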

If the network name contains a space, please use two sets of quotes (with backslashes in Git Bash on Windows): \"'--ssid='Net Work'\". In general, we recommend not using spaces in network names.

# ----------------------------------------------------------------------

# _____ _ ____ _____

# | __ \ | | / __ \ / ____|

# | |__) |__ _ ___ _ __ | |__ ___ _ __ _ __ _ _| | | | (___

# | _ // _` / __| '_ \| '_ \ / _ \ '__| '__| | | | | | |\___ \

# | | \ \ (_| \__ \ |_) | |_) | __/ | | | | |_| | |__| |____) |

# |_| \_\__,_|___/ .__/|_.__/ \___|_| |_| \__, |\____/|_____/

# | | __/ |

# |_| |___/

# ____

# | _ \

# | |_) |_ _ _ __ _ __

# | _ <| | | | '__| '_ \

# | |_) | |_| | | | | | |

# |____/ \__,_|_| |_| |_|

#

#

# ----------------------------------------------------------------------

INFO: No inventory found or forced rebuild. Building inventory with defaults.

+-------+----------------+---------+---------+--------------------+----------+------------------------+----------+-------------+----------------------------+--------+---------+-------------+-------------------+

| host | tag | cluster | service | services | ip | dns | router | locale | timezone | owners | comment | description | keyfile |

+-------+----------------+---------+---------+--------------------+----------+------------------------+----------+-------------+----------------------------+--------+---------+-------------+-------------------+

| red | latest-lite-64 | | manager | ['bridge', 'wifi'] | 10.1.1.1 | | | en_US.UTF-8 | AmericaIndianaIndianapolis | | | | ~/.ssh/id_rsa.pub |

| red01 | latest-lite-64 | | worker | | 10.1.1.2 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | en_US.UTF-8 | AmericaIndianaIndianapolis | | | | ~/.ssh/id_rsa.pub |

| red02 | latest-lite-64 | | worker | | 10.1.1.3 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | en_US.UTF-8 | AmericaIndianaIndianapolis | | | | ~/.ssh/id_rsa.pub |

| red03 | latest-lite-64 | | worker | | 10.1.1.4 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | en_US.UTF-8 | AmericaIndianaIndianapolis | | | | ~/.ssh/id_rsa.pub |

| red04 | latest-lite-64 | | worker | | 10.1.1.5 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | en_US.UTF-8 | AmericaIndianaIndianapolis | | | | ~/.ssh/id_rsa.pub |

+-------+----------------+---------+---------+--------------------+----------+------------------------+----------+-------------+----------------------------+--------+---------+-------------+-------------------+

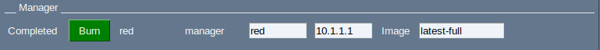

Note that the --new flag instructs cms burn to build a new inventory and to overwrite it if it already exists. The -f flag does the same, but we have not yet tested it. To see the contents of this file, you can use the command cms inventory list --inventory=inventory-red.yaml

After each card is burned, cms burn raspberry will prompt you to swap the SD card to burn the next host.

After all the cards have been burned, we can plug them into our Raspberry Pis and boot. Ensure that your workers and manager are connected to the same network switch via ethernet cables. Also ensure that this network switch does not itself have internet access; for example, do not connect the switch to the internet router. The manager is the sole point of internet access here. We do this deliberately so that we can disconnect all nodes from the network via the manager if needed.

After you boot, we recommend waiting 2-3 minutes for the boot process to complete. When it has completed, the red LED is on and the green LED is off. If this is not the case, please wait. If a Pi still does not come up after a long while, reboot it and see if it works after the reboot. Also, check your hardware and network.

An important issue is how the Pis are named in your network. Some networks require that .local be added to the hostname. To find out, you can use the following command on machines where arp is available:

| |

On a mesh network, it prints out all Raspberry Pi 4s in your network and indicates whether they can be found with the .local extension to the hostname. On a static network, it returns the manager node and indicates whether the node name needs to be appended with .local.

cms host

Before we start, make sure that you have added your ssh key. You also need to have your ssh-agent running, as discussed before.

| |

The next command depends on whether you can use the .local extension in your network.

Next, we need to identify whether you need to use the manager as a proxy machine to the worker nodes. We also need to know whether your host names must be appended with .local, which is the case for a network that uses mDNS, such as on macOS. If you do not know these values, you can try out different combinations of the flags and use the one that succeeds.

If you are not using a mesh network, you can set up a proxy jump on your laptop/desktop by adding it to the ssh config file. This makes it easier to access our workers. Use the following command to set this up:

| |

This assumes that host names in your network are appended with .local. If this is not the case, you can switch it off with the following command:

| |

If you are on a mesh network, it is simpler not to use a proxy machine and to use the following:

| |

However, some mesh networks still require the addition of .local, in which case you can use

| |
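The four variants above differ in only two choices: whether workers are reached through the manager, and whether names get a .local suffix. The following Python sketch writes ssh config entries for those choices so the difference is visible. It is an illustration under assumptions, not the literal output of cms host config. The user name pi (taken from the pi@red key comments shown later) and the entry layout are our choices. ProxyJump is the standard OpenSSH option for connecting through an intermediate host. The function name ssh_config is hypothetical.

```python
def ssh_config(manager: str, workers: list[str], user: str = "pi",
               proxy: bool = True, local: bool = True) -> str:
    """Return ~/.ssh/config entries for a manager and its workers (illustrative sketch)."""
    suffix = ".local" if local else ""
    # The manager is always reached directly from the laptop/desktop.
    lines = [f"Host {manager}", f"    HostName {manager}{suffix}", f"    User {user}", ""]
    for worker in workers:
        # Assumption: behind the proxy, the manager resolves worker names itself,
        # so the .local suffix is only used when workers are reached directly.
        target = worker if proxy else f"{worker}{suffix}"
        lines += [f"Host {worker}", f"    HostName {target}", f"    User {user}"]
        if proxy:
            # Hop through the manager, the only node connected to the outside network.
            lines.append(f"    ProxyJump {manager}")
        lines.append("")
    return "\n".join(lines)


print(ssh_config("red", ["red01", "red02"]))
```

With proxy=False, the entries correspond to the mesh network case, where every node is addressed directly.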

To view the modifications, use the command

| |

Optionally, you can add the following to the top of your ~/.ssh/config file. Because you may have done this before, we do not add it automatically and ask you to add it with your favorite editor.

Host *

ServerAliveInterval 30

ServerAliveCountMax 5

This keeps the connections alive and prevents you from being disconnected.
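The two settings multiply. OpenSSH sends a keepalive request after ServerAliveInterval seconds without data from the server, and it disconnects after ServerAliveCountMax unanswered requests. With the values above, an unresponsive connection is therefore dropped after roughly 30 × 5 = 150 seconds. A tiny Python helper (hypothetical name keepalive_timeout) makes the arithmetic explicit:

```python
def keepalive_timeout(interval_s: int = 30, count_max: int = 5) -> int:
    """Approximate seconds before ssh gives up on an unresponsive server.

    After interval_s seconds without data from the server, ssh sends a
    keepalive request; after count_max unanswered requests it disconnects.
    """
    return interval_s * count_max


print(keepalive_timeout())  # 150
```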

First, verify that you can reach the manager (red).

| |

Next, we want to execute a more advanced program that retrieves the temperature of the Pis. A simple cms command lets us verify the connection to our Pis: our built-in temperature command reads the temperature values from each of the Pis.

| |

This returns a table recording the temperatures:

+--------+--------+-------+----------------------------+

| host | cpu | gpu | date |

|--------+--------+-------+----------------------------|

| red | 47.712 | 47.2 | 2022-04-27 19:52:56.674668 |

| red01 | 37.485 | 37.4 | 2022-04-27 19:52:57.333300 |

| red02 | 38.946 | 38.9 | 2022-04-27 19:52:57.303389 |

| red03 | 38.946 | 39.4 | 2022-04-27 19:52:57.440690 |

| red04 | 38.936 | 39.4 | 2022-04-27 19:52:57.550690 |

+--------+--------+-------+----------------------------+

By receiving this information from our devices, we have confirmed our access.
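To check the table programmatically, for example after saving the output of the temperature command to a file, a small Python sketch can parse it. It then reports nodes that are absent or report 0, which the troubleshooting advice below treats as missing or offline. The helpers parse_temperatures and missing_nodes are hypothetical and assume the four-column layout shown above.

```python
def parse_temperatures(table: str) -> dict[str, float]:
    """Return {host: cpu_temperature} from the ASCII table printed by the temperature command."""
    temperatures = {}
    for line in table.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # Data rows have four cells; border lines collapse to one cell, and the header is skipped.
        if len(cells) != 4 or cells[0] == "host":
            continue
        try:
            temperatures[cells[0]] = float(cells[1])
        except ValueError:
            continue
    return temperatures


def missing_nodes(expected: list[str], temperatures: dict[str, float]) -> list[str]:
    """Hosts that did not report, or reported 0 (offline or unreachable)."""
    return [host for host in expected if temperatures.get(host, 0.0) == 0.0]


sample = """| host | cpu | gpu | date |
| red | 47.712 | 47.2 | 2022-04-27 19:52:56.674668 |
| red01 | 37.485 | 37.4 | 2022-04-27 19:52:57.333300 |"""
print(missing_nodes(["red", "red01", "red02"], parse_temperatures(sample)))  # ['red02']
```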

If you receive the error ERROR: not enough values to unpack (expected 2, got 0), delete the proxy config inside the ~/.ssh/config file. You can do this with nano or Notepad++, or delete the whole file with rm ~/.ssh/config if it contains no other important preexisting configs made outside the scope of this project. Then try the different cms host config commands individually, as shown in section 7.1, until temp returns the temperatures of all nodes. A value of 0 indicates a missing/offline node or a host that cannot connect.

To set up keys on each Pi so that we can log in from one Pi to another, we can use our create/gather/scatter/access commands. First, we create a key on the workers and the manager. Then we gather all keys and scatter them on all Pis. We also set up a configuration file located at .ssh/config on each of the hosts. The sequence of commands is as follows:

We describe each step in more detail next, or if you are impatient, you can skip to the next section.

Each of the nodes only has our laptop’s ssh key in its authorized_keys file. We can use the cms command to gather all keys in our cluster and then distribute them so that each node can ssh into every other node.

We first create ssh-keys for all the nodes in our cluster.

| |

+-------+---------+--------------------------------------------------+

| host | success | stdout |

+-------+---------+--------------------------------------------------+

| red | True | ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC99RR79UTQ |

| | | JznOPRN/FI6MqNsZ5Eirqs7JXA4UYfnMx8LVaD/ZcI6Sajr0 |

| | | 2nw2ucec8OMxtdzPrpWX5B+Hxz3GZWNKCbh2yHhMDIrf/Ohn |

| | | QGJrBx1mtAbqy4gd7qljEsL0FummdYmPdtHFBy7t2zkVp0J1 |

| | | V5YiLEbbrmX9RXQF1bJvHb4VNOOcwq47qX9h561q8qBxgQLz |

| | | F3iHmrMxmL8oma1RFVgZmjhnKMoXF+t13uZrf2R5/hVO4K6T |

| | | +PENSnjW7OX6aiIT8Ty1ga74FhXr9F5t14cofpN6QwuF2SqM |

| | | CgpVGfRSGMrLI/2pefszU2b5eeICWYePdopkslML+f+n |

| | | pi@red |

| red01 | True | ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDRN/rGGF+e |

| | | dZ9S2IWX4P26F7T2H+nsbw7CfeJ6df9oX/npYuM9BPDzcEP7 |

| | | +2jNIZtZVehJj5zHiodjAspCxjg+mByQZye1jioa3MBmgO3j |

| | | VwxCOPA7x0Wc2Dm9/QOg1kMMMlnNT2NHs+SeQMDXoUkpaLCQ |

| | | 108VQxaTclJ67USC1e/9B7OtjhsVm5kGA7Eamyd5PgtANT7G |

| | | jHERXSnGzFnbqqsZEBvCLWLSbaSq3adWR1bVvDblu11nyitE |

| | | x7YKZA9RK0A7rTBzrZa70SfG65dSbpqQFxmQYSrgiwVSBokk |

| | | 0vvk5l7NhBDrrrxWYWd9dm/SrDrInjcuDsCsjOVuccx7 |

| | | pi@red01 |

... # Omitted some output for brevity

+-------+---------+--------------------------------------------------+

We can subsequently gather these keys into a file.

| |

Then we scatter them to the authorized_keys files of our nodes.

| |

host key scatter red,red0[1-4]

+-------+---------+--------+

| host | success | stdout |

+-------+---------+--------+

| red | True | |

| red01 | True | |

| red02 | True | |

| red03 | True | |

| red04 | True | |

+-------+---------+--------+

All nodes should now have ssh access to each other.
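Conceptually, gathering concatenates the public keys of all nodes, and scattering appends that list to every node's authorized_keys file. The Python sketch below models both steps on plain strings. The gather and scatter functions here are hypothetical helpers, not the cms host key commands. Keeping keys already present on a node (such as your laptop's key) is our assumption.

```python
def gather(public_keys: dict[str, str]) -> str:
    """Combine every node's public key into one authorized_keys text (gather step)."""
    # Sort for a stable order and drop duplicates; each key stays on its own line.
    unique = sorted({key.strip() for key in public_keys.values() if key.strip()})
    return "\n".join(unique) + "\n"


def scatter(hosts: list[str], authorized_keys: str,
            existing: dict[str, str]) -> dict[str, str]:
    """Return the new authorized_keys content per host (scatter step).

    Keys already present on a host are kept; gathered keys are appended once.
    """
    result = {}
    for host in hosts:
        lines = existing.get(host, "").splitlines()
        for key in authorized_keys.splitlines():
            if key not in lines:
                lines.append(key)
        result[host] = "\n".join(lines) + "\n"
    return result


keys = {"red": "ssh-rsa AAA pi@red", "red01": "ssh-rsa BBB pi@red01"}
print(scatter(["red", "red01"], gather(keys), {"red": "ssh-rsa LAP you@laptop\n"})["red"])
```

Checking for a key before appending makes the scatter step safe to repeat without duplicating entries.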

We would like to log in to each of the nodes conveniently, without needing to add each host to known_hosts. To do this, we have developed a command that switches off StrictHostKeyChecking for all hosts. Before you can issue the command, you may have to switch to the windows branch of cloudmesh-inventory. To do so, change into the cloudmesh-inventory directory and run git checkout windows followed by git pull. You invoke the command with

(ENV3) you@yourlaptop $ cms host key access red,red0[1-4]

You will see an output similar to

+-------+---------+--------+--------------------------------------------------+

| host | success | stdout | stderr |

+-------+---------+--------+--------------------------------------------------+

| red | True | | b"Warning: Permanently added 'red.local' |

| | | | (...) to the list of known hosts.\r\n" |

| red01 | True | | b"Warning: Permanently added 'red01' (...) |

| | | | to the list of known hosts.\r\n" |

| red02 | True | | b"Warning: Permanently added 'red02' (...) |

| | | | to the list of known hosts.\r\n" |

| red03 | True | | b"Warning: Permanently added 'red03' (...) |

| | | | to the list of known hosts.\r\n" |

| red04 | True | | b"Warning: Permanently added 'red04' (...) |

| | | | to the list of known hosts.\r\n" |

+-------+---------+--------+--------------------------------------------------+

To disable StrictHostKeyChecking with your own customization, you can pass along a filename that contains it. Here is an example of how to disable StrictHostKeyChecking on the subnet 10.1.1.*. We assume you have the following in the file subnet.conf:

Host 10.1.1.*

StrictHostKeyChecking no

Now you can invoke the command with:

(ENV3) you@yourlaptop $ cms host key access red,red0[1-4] subnet.conf

cms on a Pi

Cloudmesh provides some very useful commands, so it can be advantageous to install it on the Pis. This is very simple with a one-line curl command:

| |

This will not only install cms but also upgrade your system, install the dependencies for cms, and create a virtual environment. Because a system upgrade takes place, this command may take several minutes to run.

After a reboot, we can verify the success of the script with the following:

| |

Documented commands (type help <topic>):

========================================

EOF check default help pause quit start test

admin clear diagram host pi set stop var

banner commands dryrun info provider shell stopwatch version

bridge config echo inventory py sleep sys

burn debug gui man q ssh term

This feature is implicitly included in the burn command, and you will have an inventory created automatically.

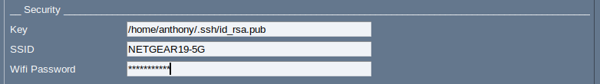

Cloudmesh has a simple system for managing cluster configurations as an inventory. We do this management for you, but you can also control it from the command line. We can first add a manager with the cluster subnet IP 10.1.1.1. We also add the bridge service, which cms recognizes as the WiFi bridge service connecting devices on eth0 to the internet. We also set the timezone and locale here; change them as you wish.

As we want to make the cluster very easy to create, we demonstrated in Section 5 how to create a default cluster directly from the burn command. In the future, this behavior will also be implemented in the inventory command. To make a default inventory named inventory-red.yaml:

you@yourlaptop $ cms inventory add cluster "red,red[01-04]"

This command will find your current WiFi SSID and your current locale, and set up a simple network on your cluster as depicted in Figure 1. If you have more or fewer nodes, the command makes the appropriate updates.

For a custom cluster, you can inspect the parameters of the inventory command. Here are the commands for the previous setup, written out explicitly. You can modify the parameters to your liking:

you@yourlaptop $ cms inventory add red --service=manager --ip=10.1.1.1 --tag="latest-lite" --timezone="America/Indiana/Indianapolis" --locale="us" --inventory="inventory-red.yaml"

you@yourlaptop $ cms inventory set red services to "bridge,wifi" --listvalue --inventory="inventory-red.yaml"

We can then add the workers

you@yourlaptop $ cms inventory add "red0[1-4]" --service=worker --ip="10.1.1.[2-5]" --router=10.1.1.1 --tag="latest-lite" --timezone="America/Indiana/Indianapolis" --locale="us" --inventory="inventory-red.yaml"

you@yourlaptop $ cms inventory set "red0[1-4]" dns to "8.8.8.8,8.8.4.4" --listvalue --inventory="inventory-red.yaml"

Note that we are using Google’s DNS servers here (8.8.8.8 and 8.8.4.4).
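The addressing scheme above can be reproduced with Python's standard ipaddress module. In that scheme, the manager is at 10.1.1.1, the workers get consecutive addresses, the manager serves as the workers' router, and the workers use Google's DNS. The function build_inventory and its dictionary keys are hypothetical. They mirror the columns that cms inventory list prints, not the actual inventory-red.yaml format.

```python
import ipaddress


def build_inventory(manager: str, workers: list[str], manager_ip: str = "10.1.1.1",
                    dns: tuple[str, ...] = ("8.8.8.8", "8.8.4.4")) -> list[dict]:
    """Give the manager the first address and the workers the following ones (sketch)."""
    base = ipaddress.IPv4Address(manager_ip)
    rows = [{"host": manager, "service": "manager", "ip": str(base),
             "services": ["bridge", "wifi"]}]
    for offset, worker in enumerate(workers, start=1):
        rows.append({"host": worker, "service": "worker",
                     "ip": str(base + offset),  # 10.1.1.2, 10.1.1.3, ...
                     "router": str(base),       # workers reach the internet through the manager
                     "dns": list(dns)})
    return rows


for row in build_inventory("red", ["red01", "red02", "red03", "red04"]):
    print(row["host"], row["ip"])
```

Adding an integer to an IPv4Address yields the next address, so the worker addresses 10.1.1.2 to 10.1.1.5 follow directly from the manager's address.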

Our cluster configuration is now complete. You may run the following to list your configuration. We include ours for a sanity check:

you@yourlaptop $ cms inventory list --inventory="inventory-red.yaml"

+-------+-------------+---------+---------+--------------------+----------+------------------------+----------+--------+------------------------------+--------+---------+-------------+-------------------+

| host | tag | cluster | service | services | ip | dns | router | locale | timezone | owners | comment | description | keyfile |

+-------+-------------+---------+---------+--------------------+----------+------------------------+----------+--------+------------------------------+--------+---------+-------------+-------------------+

| red | latest-lite | | manager | ['bridge', 'wifi'] | 10.1.1.1 | | | us | America/Indiana/Indianapolis | | | | ~/.ssh/id_rsa.pub |

| red01 | latest-lite | | worker | | 10.1.1.2 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | us | America/Indiana/Indianapolis | | | | ~/.ssh/id_rsa.pub |

| red02 | latest-lite | | worker | | 10.1.1.3 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | us | America/Indiana/Indianapolis | | | | ~/.ssh/id_rsa.pub |

| red03 | latest-lite | | worker | | 10.1.1.4 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | us | America/Indiana/Indianapolis | | | | ~/.ssh/id_rsa.pub |

| red04 | latest-lite | | worker | | 10.1.1.5 | ['8.8.8.8', '8.8.4.4'] | 10.1.1.1 | us | America/Indiana/Indianapolis | | | | ~/.ssh/id_rsa.pub |

+-------+-------------+---------+---------+--------------------+----------+------------------------+----------+--------+------------------------------+--------+---------+-------------+-------------------+

You can now specify your inventory when you burn your whole cluster or specific machines from it with the burn command. The data of all hosts in the inventory is written to the machines, regardless of whether they appear in the burn command.

Burn the whole cluster.

(ENV3) (admin) you@yourlaptop $ cms burn raspberry "red,red0[1-4]" --device=/dev/sdX --inventory="inventory-red.yaml"

Burn a specific machine.

(ENV3) (admin) you@yourlaptop $ cms burn raspberry "red03" --device=/dev/sdX --inventory="inventory-red.yaml"

If you reburn an SD card and use it in your cluster, you will get a warning when you try to ssh into the machine. To remove the warning, simply execute the command

you@yourlaptop $ ssh-keygen -R HOSTNAME

where HOSTNAME is either the hostname or the IP address of your machine as registered in known_hosts. To see the list, please use

you@yourlaptop $ cat ~/.ssh/known_hosts
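To find the exact name to pass to ssh-keygen -R, you can scan known_hosts. The following Python sketch (hypothetical helper known_host_names) lists the host names and addresses in each entry. Hashed entries, which are written when HashKnownHosts is enabled, cannot be read back, but ssh-keygen -R still removes them when given the original host name.

```python
def known_host_names(known_hosts: str) -> list[str]:
    """List host names and addresses from known_hosts text.

    Hashed entries start with "|1|" and are reported as "<hashed>".
    """
    names = []
    for line in known_hosts.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # Lines with a marker such as "@revoked" carry the host patterns in the second field.
        patterns = fields[1] if fields[0].startswith("@") else fields[0]
        if patterns.startswith("|1|"):
            names.append("<hashed>")
        else:
            names.extend(patterns.split(","))
    return names


# Example: read your own file with open(os.path.expanduser("~/.ssh/known_hosts")).read()
print(known_host_names("red.local,10.1.1.1 ssh-ed25519 AAAAC3Nza\nred01 ssh-ed25519 AAAAC3Nzb\n"))
```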

Note that the lite tag is the same as lite-32, and full is the same as full-32. We added the explicit tags for increased consistency and visibility. In the future, we may drop the lite and full tags so that users are forced to be aware of the 32-bit and 64-bit versions. You can list all versions with

+---------------------------+------------+-------------+-------------+-----------------------------------------+

| Tag | Date | OS | Type | Version |

|---------------------------+------------+-------------+-------------+-----------------------------------------|

| lite-2020-05-28 | 2020-05-28 | raspberryos | lite | raspios_lite_armhf-2020-05-28 |

| lite-2020-08-24 | 2020-08-24 | raspberryos | lite | raspios_lite_armhf-2020-08-24 |

| lite-2020-12-04 | 2020-12-04 | raspberryos | lite | raspios_lite_armhf-2020-12-04 |

| lite-2021-01-12 | 2021-01-12 | raspberryos | lite | raspios_lite_armhf-2021-01-12 |

| lite-2021-03-25 | 2021-03-25 | raspberryos | lite | raspios_lite_armhf-2021-03-25 |

| lite-2021-05-28 | 2021-05-28 | raspberryos | lite | raspios_lite_armhf-2021-05-28 |

| lite-2021-11-08 | 2021-11-08 | raspberryos | lite | raspios_lite_armhf-2021-11-08 |

| lite-2022-01-28 | 2022-01-28 | raspberryos | lite | raspios_lite_armhf-2022-01-28 |

| lite-2022-04-07 | 2022-04-07 | raspberryos | lite | raspios_lite_armhf-2022-04-07 |

| latest-lite | 2022-04-07 | raspberryos | lite | raspios_lite_armhf-2022-04-07 |

| full-2020-05-28 | 2020-05-28 | raspberryos | full | raspios_full_armhf-2020-05-28 |

| full-2020-08-24 | 2020-08-24 | raspberryos | full | raspios_full_armhf-2020-08-24 |

| full-2020-12-04 | 2020-12-04 | raspberryos | full | raspios_full_armhf-2020-12-04 |

| full-2021-01-12 | 2021-01-12 | raspberryos | full | raspios_full_armhf-2021-01-12 |

| full-2021-03-25 | 2021-03-25 | raspberryos | full | raspios_full_armhf-2021-03-25 |

| full-2021-05-28 | 2021-05-28 | raspberryos | full | raspios_full_armhf-2021-05-28 |

| full-2021-11-08 | 2021-11-08 | raspberryos | full | raspios_full_armhf-2021-11-08 |

| full-2022-01-28 | 2022-01-28 | raspberryos | full | raspios_full_armhf-2022-01-28 |

| full-2022-04-07 | 2022-04-07 | raspberryos | full | raspios_full_armhf-2022-04-07 |

| latest-full | 2022-04-07 | raspberryos | full | raspios_full_armhf-2022-04-07 |

| lite-2020-05-28 | 2020-05-28 | raspberryos | lite-32 | raspios_lite_armhf-2020-05-28 |

| lite-2020-08-24 | 2020-08-24 | raspberryos | lite-32 | raspios_lite_armhf-2020-08-24 |

| lite-2020-12-04 | 2020-12-04 | raspberryos | lite-32 | raspios_lite_armhf-2020-12-04 |

| lite-2021-01-12 | 2021-01-12 | raspberryos | lite-32 | raspios_lite_armhf-2021-01-12 |

| lite-2021-03-25 | 2021-03-25 | raspberryos | lite-32 | raspios_lite_armhf-2021-03-25 |

| lite-2021-05-28 | 2021-05-28 | raspberryos | lite-32 | raspios_lite_armhf-2021-05-28 |

| lite-2021-11-08 | 2021-11-08 | raspberryos | lite-32 | raspios_lite_armhf-2021-11-08 |

| lite-2022-01-28 | 2022-01-28 | raspberryos | lite-32 | raspios_lite_armhf-2022-01-28 |

| lite-2022-04-07 | 2022-04-07 | raspberryos | lite-32 | raspios_lite_armhf-2022-04-07 |

| latest-lite-32 | 2022-04-07 | raspberryos | lite-32 | raspios_lite_armhf-2022-04-07 |

| full-2020-05-28 | 2020-05-28 | raspberryos | full-32 | raspios_full_armhf-2020-05-28 |

| full-2020-08-24 | 2020-08-24 | raspberryos | full-32 | raspios_full_armhf-2020-08-24 |

| full-2020-12-04 | 2020-12-04 | raspberryos | full-32 | raspios_full_armhf-2020-12-04 |

| full-2021-01-12 | 2021-01-12 | raspberryos | full-32 | raspios_full_armhf-2021-01-12 |

| full-2021-03-25 | 2021-03-25 | raspberryos | full-32 | raspios_full_armhf-2021-03-25 |

| full-2021-05-28 | 2021-05-28 | raspberryos | full-32 | raspios_full_armhf-2021-05-28 |

| full-2021-11-08 | 2021-11-08 | raspberryos | full-32 | raspios_full_armhf-2021-11-08 |

| full-2022-01-28 | 2022-01-28 | raspberryos | full-32 | raspios_full_armhf-2022-01-28 |

| full-2022-04-07 | 2022-04-07 | raspberryos | full-32 | raspios_full_armhf-2022-04-07 |

| latest-full-32 | 2022-04-07 | raspberryos | full-32 | raspios_full_armhf-2022-04-07 |

| lite_arm64-2020-08-24 | 2020-08-24 | raspberryos | lite-64 | raspios_lite_arm64-2020-08-24 |

| lite_arm64-2021-04-09 | 2021-04-09 | raspberryos | lite-64 | raspios_lite_arm64-2021-04-09 |

| lite_arm64-2021-05-28 | 2021-05-28 | raspberryos | lite-64 | raspios_lite_arm64-2021-05-28 |

| lite_arm64-2021-11-08 | 2021-11-08 | raspberryos | lite-64 | raspios_lite_arm64-2021-11-08 |

| lite_arm64-2022-01-28 | 2022-01-28 | raspberryos | lite-64 | raspios_lite_arm64-2022-01-28 |

| lite_arm64-2022-04-07 | 2022-04-07 | raspberryos | lite-64 | raspios_lite_arm64-2022-04-07 |

| latest-lite-64 | 2022-04-07 | raspberryos | lite-64 | raspios_lite_arm64-2022-04-07 |

| arm64-2020-05-28 | 2020-05-28 | raspberryos | full-64 | raspios_arm64-2020-05-28 |

| arm64-2020-08-24 | 2020-08-24 | raspberryos | full-64 | raspios_arm64-2020-08-24 |

| arm64-2021-04-09 | 2021-04-09 | raspberryos | full-64 | raspios_arm64-2021-04-09 |

| arm64-2021-05-28 | 2021-05-28 | raspberryos | full-64 | raspios_arm64-2021-05-28 |

| arm64-2021-11-08 | 2021-11-08 | raspberryos | full-64 | raspios_arm64-2021-11-08 |

| arm64-2022-01-28 | 2022-01-28 | raspberryos | full-64 | raspios_arm64-2022-01-28 |

| arm64-2022-04-07 | 2022-04-07 | raspberryos | full-64 | raspios_arm64-2022-04-07 |

| latest-full-64 | 2022-04-07 | raspberryos | full-64 | raspios_arm64-2022-04-07 |

| oldstable_lite-2021-12-02 | 2021-12-02 | raspberryos | lite-legacy | raspios_oldstable_lite_armhf-2021-12-02 |

| oldstable_lite-2022-01-28 | 2022-01-28 | raspberryos | lite-legacy | raspios_oldstable_lite_armhf-2022-01-28 |

| oldstable_lite-2022-04-07 | 2022-04-07 | raspberryos | lite-legacy | raspios_oldstable_lite_armhf-2022-04-07 |

| latest-lite-legacy | 2022-04-07 | raspberryos | lite-legacy | raspios_oldstable_lite_armhf-2022-04-07 |

| oldstable-2021-12-02 | 2021-12-02 | raspberryos | full-legacy | raspios_oldstable_armhf-2021-12-02 |

| oldstable-2022-01-28 | 2022-01-28 | raspberryos | full-legacy | raspios_oldstable_armhf-2022-01-28 |

| oldstable-2022-04-07 | 2022-04-07 | raspberryos | full-legacy | raspios_oldstable_armhf-2022-04-07 |

| latest-full-legacy | 2022-04-07 | raspberryos | full-legacy | raspios_oldstable_armhf-2022-04-07 |

| ubuntu-20.04.2-64-bit | 2021-02-01 | ubuntu | ubuntu | 20.04.2&architecture=server-arm64+raspi |

| ubuntu-20.04.2-32-bit | 2021-02-01 | ubuntu | ubuntu | 20.04.2&architecture=server-armhf+raspi |

| ubuntu-20.10-64-bit | 2021-02-01 | ubuntu | ubuntu | 20.10&architecture=server-arm64+raspi |

| ubuntu-20.10-32-bit | 2021-02-01 | ubuntu | ubuntu | 20.10&architecture=server-armhf+raspi |

| ubuntu-desktop | 2021-02-01 | ubuntu | ubuntu | 20.10&architecture=desktop-arm64+raspi |

+---------------------------+------------+-------------+-------------+-----------------------------------------+

you@yourlaptop $ cms burn image get latest-lite

you@yourlaptop $ cms burn image get latest-full

The images are stored in the ~/.cloudmesh/cmburn/images directory and can be removed with the command

you@yourlaptop $ rm -i ~/.cloudmesh/cmburn/images/*

We also provide a list of related tutorials for other operating systems. This includes

Cluster with RaspberryOS on it:

Cluster with Ubuntu on it:

We also have the following tutorials on other web pages:

Hackaday:

Piplanet:

Many tutorials are available at

We would like to thank Venkata (DK) Kolli github.com/dkkolli for his contributions to this project. The timezone preference was contributed by him. Note that the timezone preference does not work when burning is conducted from a Pi. We are looking for contributors who can fix this issue.

Here comes a small abstract

Learning Objectives

Topics covered

Warning

This is a warning notice. Be warned!

Tip

This is a very good tip.

Note

This is a very good tip.

Info

This is a very good tip.

Sections should be numbered

| Firstname | Lastname |
|---|---|
| Gregor | von Laszewski |

participant participant as Foo

actor actor as Foo1

boundary boundary as Foo2

control control as Foo3

entity entity as Foo4

database database as Foo5

collections collections as Foo6

queue queue as Foo7

Foo -> Foo1 : To actor

Foo -> Foo2 : To boundary

Foo -> Foo3 : To control

Foo -> Foo4 : To entity

Foo -> Foo5 : To database

Foo -> Foo6 : To collections

Foo -> Foo7: To queue

In this tutorial we will deploy and demonstrate MPI for use across a cluster of Raspberry Pis. The Pis can run Ubuntu or Raspberry OS. This tutorial is part of a larger effort to use Python for MPI programming.

Learning Objectives

Topics covered

Modern AI and deep learning algorithms require immense processing power to churn through big data. In this tutorial we show how to install and try out MPI on a cluster of Raspberry Pis. MPI gives developers access to a powerful set of parallel processing, synchronization, and communication tools to develop their algorithms in a scalable manner on clusters. mpi4py gives developers access to MPI from Python, allowing easy integration into Python applications.

This tutorial assumes a cluster burned using the convenient cloudmesh burn program. It has the advantage that the cluster is preconfigured for you at burn time of the SD Cards. We assume that you use one of the following methods:

The tutorial supports both Raspberry OS and Ubuntu.

We use cloudmesh shell (cms) to provide you with some easy to use commands that address deployment of MPI on the cluster.

The MPI commands available from cms are:

$ cms mpi deploy raspberry HOSTS

$ cms mpi deploy ubuntu HOSTS

$ cms mpi uninstall raspberry HOSTS

$ cms mpi uninstall ubuntu HOSTS

The commands can be installed as follows:

$ cd cm

$ cloudmesh-installer get mpi

We can install MPI on a cluster of Pis using the following command. The installation is done in parallel to scale with the number of hosts. Make sure to choose the argument that corresponds to the OS installed on the Raspberry Pis. In this example we will demonstrate the installation on a cluster of Pis running Raspberry OS.

Required python packages are installed, followed by MPI and mpi4py, and then the version of MPI installed is checked.

A return code of 0 indicates success; any other value indicates failure.

(ENV3) you@your-laptop:~ $ cms mpi deploy raspberry "red,red0[1-3]"

mpi deploy raspberry red,red0[1-3]

# ----------------------------------------------------------------------

# Install MPI on hosts

# ----------------------------------------------------------------------

INFO: Installing essential python packages

INFO: sudo apt-get install python3-venv python3-wheel python3-dev build-essential python3-pip -y; pip3 install pip -U; python3 -m venv ~/ENV3

INFO: This may take several minutes

+-------+------------+--------+

| host | returncode | stderr |

+-------+------------+--------+

| red | 0 | |

| red01 | 0 | |

| red02 | 0 | |

| red03 | 0 | |

+-------+------------+--------+

INFO: Installing mpi on hosts

INFO: sudo apt-get install openmpi-bin -y; source ~/ENV3/bin/activate; pip3 install mpi4py

INFO: This may take several minutes

+-------+------------+--------+

| host | returncode | stderr |

+-------+------------+--------+

| red | 0 | |

| red01 | 0 | |

| red02 | 0 | |

| red03 | 0 | |

+-------+------------+--------+

INFO: Checking version of mpi on hosts

INFO: mpicc --showme:version

+-------+------------+--------+-------------------------------------+

| host | returncode | stderr | stdout |

+-------+------------+--------+-------------------------------------+

| red | 0 | | mpicc: Open MPI 3.1.3 (Language: C) |

| red01 | 0 | | mpicc: Open MPI 3.1.3 (Language: C) |

| red02 | 0 | | mpicc: Open MPI 3.1.3 (Language: C) |

| red03 | 0 | | mpicc: Open MPI 3.1.3 (Language: C) |

+-------+------------+--------+-------------------------------------+
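The deploy command runs each step on all hosts in parallel and reports one return code per host. The following minimal Python sketch shows that pattern. It is not cloudmesh's implementation. `run_on_hosts`, `failed_hosts`, and `ssh_runner` are hypothetical names, and `ssh_runner` assumes password-less (key-based) SSH to every host.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_on_hosts(hosts: List[str], command: str,
                 runner: Callable[[str, str], int],
                 max_workers: int = 8) -> Dict[str, int]:
    """Run `command` on every host concurrently and return {host: returncode}."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # One job per host, so a slow host does not hold up the others.
        futures = {host: pool.submit(runner, host, command) for host in hosts}
        return {host: future.result() for host, future in futures.items()}


def failed_hosts(results: Dict[str, int]) -> List[str]:
    """Hosts whose return code is non-zero, i.e. where the command failed."""
    return [host for host, code in results.items() if code != 0]


def ssh_runner(host: str, command: str) -> int:
    """Hypothetical runner using the system ssh client (key-based login assumed)."""
    return subprocess.run(["ssh", host, command]).returncode
```

For example, `run_on_hosts(["red", "red01"], "mpicc --showme:version", ssh_runner)` would return a dictionary such as `{"red": 0, "red01": 0}`. Injecting the runner keeps the parallel logic independent of how commands actually reach the hosts.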

To test MPI on a single host, run the following command, which starts 4 processes on one Pi.

(ENV3) pi@red:~ $ mpiexec -n 4 python3 -m mpi4py.bench helloworld

Hello, World! I am process 0 of 4 on red.

Hello, World! I am process 1 of 4 on red.

Hello, World! I am process 2 of 4 on red.

Hello, World! I am process 3 of 4 on red.

NOTE: This example does not currently work with MPICH installed on Ubuntu, but it does work with Open MPI.

To test MPI functionality on a cluster you can run the following command.

NOTE: This command may fail silently if one of the hosts is not in the ~/.ssh/known_hosts file of the executing machine. To make sure each host is present, connect to it via ssh first (for example, ssh red01) and approve adding the host key to the known_hosts file.
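Because this failure is silent, it can help to check beforehand which hosts are still missing from known_hosts. The minimal sketch below assumes OpenSSH's known_hosts format. That format includes hashed entries of the form `|1|salt|hash`, where the hash is an HMAC-SHA1 of the host name and OpenSSH writes these when HashKnownHosts is enabled. `missing_hosts` is a hypothetical helper. For a single host, `ssh-keygen -F HOST` performs the same lookup.

```python
import base64
import hashlib
import hmac
from pathlib import Path
from typing import Iterable, List


def _matches(host_field: str, host: str) -> bool:
    """True if one known_hosts host field refers to `host`."""
    if host_field.startswith("|1|"):
        # Hashed entry: |1|base64(salt)|base64(HMAC-SHA1(key=salt, msg=host))
        _, _, salt_b64, hash_b64 = host_field.split("|")
        salt = base64.b64decode(salt_b64)
        digest = hmac.new(salt, host.encode(), hashlib.sha1).digest()
        return hmac.compare_digest(digest, base64.b64decode(hash_b64))
    # Plain entry: comma-separated names; wildcards and [host]:port are not handled.
    return host in host_field.split(",")


def missing_hosts(hosts: Iterable[str], known_hosts_text: str) -> List[str]:
    """Return the hosts that have no entry in the given known_hosts content."""
    fields = []
    for line in known_hosts_text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue
        if parts[0].startswith("@"):  # @cert-authority / @revoked marker
            parts = parts[1:]
        if parts:
            fields.append(parts[0])
    return [h for h in hosts if not any(_matches(f, h) for f in fields)]


def missing_from_default_file(hosts: Iterable[str]) -> List[str]:
    """Check ~/.ssh/known_hosts of the current user."""
    path = Path.home() / ".ssh" / "known_hosts"
    return missing_hosts(hosts, path.read_text() if path.exists() else "")
```

Running `missing_from_default_file(["red", "red01", "red02", "red03"])` on red lists the hosts you still need to ssh into once.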

(ENV3) pi@red:~ $ mpiexec -n 4 -host red,red01,red02,red03 ~/ENV3/bin/python -m mpi4py.bench helloworld

Hello, World! I am process 0 of 4 on red.

Hello, World! I am process 1 of 4 on red01.

Hello, World! I am process 2 of 4 on red02.

Hello, World! I am process 3 of 4 on red03.

However, this runs only one process per host. A Raspberry Pi 4 has 4 cores. We can declare this in a machine file and then use mpirun with that machinefile to use all available processors.

Create a file named machinefile with the following content.

pi@red slots=4

pi@red01 slots=4

pi@red02 slots=4

pi@red03 slots=4
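Writing the machinefile by hand is fine for four nodes, but for larger clusters it can be generated. The minimal sketch below assumes that every node has the same number of slots (4 for a Pi 4) and the same user name. `machinefile` is a hypothetical helper. The total it returns is the value to pass to `mpirun -np`.

```python
from typing import List, Tuple


def machinefile(hosts: List[str], user: str = "pi", slots: int = 4) -> Tuple[str, int]:
    """Return machinefile text (one `user@host slots=N` line per host)
    together with the total number of slots for mpirun -np."""
    lines = [f"{user}@{host} slots={slots}" for host in hosts]
    return "\n".join(lines) + "\n", slots * len(hosts)
```

For the four nodes used here, `machinefile(["red", "red01", "red02", "red03"])` yields the four lines shown above and a total of 16. Write the text to a file named machinefile before calling mpirun.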

Now we can run the same helloworld example while using all available processors.

(ENV3) pi@red:~ $ mpirun -np 16 -machinefile ./machinefile ~/ENV3/bin/python -m mpi4py.bench helloworld

Hello, World! I am process 0 of 16 on red.

Hello, World! I am process 1 of 16 on red.

Hello, World! I am process 2 of 16 on red.

Hello, World! I am process 3 of 16 on red.

Hello, World! I am process 4 of 16 on red01.

Hello, World! I am process 6 of 16 on red01.

Hello, World! I am process 5 of 16 on red01.

Hello, World! I am process 7 of 16 on red01.

Hello, World! I am process 8 of 16 on red02.

Hello, World! I am process 9 of 16 on red02.

Hello, World! I am process 10 of 16 on red02.

Hello, World! I am process 11 of 16 on red02.

Hello, World! I am process 12 of 16 on red03.

Hello, World! I am process 13 of 16 on red03.

Hello, World! I am process 14 of 16 on red03.

Hello, World! I am process 15 of 16 on red03.
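The output shows that ranks are placed host by host, filling each host's slots before moving on to the next one. The lines may print out of order (process 6 before 5) because the processes run concurrently. The sketch below models that placement so you can predict where a rank will run. It is an illustration of the by-slot placement seen above, not Open MPI's code. `place_ranks` is hypothetical. Like Open MPI's default behavior, it refuses to start more processes than there are slots.

```python
from typing import Dict, List, Tuple


def place_ranks(hosts: List[Tuple[str, int]], np: int) -> Dict[int, str]:
    """Assign ranks 0..np-1 to (host, slots) pairs, filling each host in order."""
    total = sum(slots for _, slots in hosts)
    if np > total:
        raise ValueError(f"{np} processes requested but only {total} slots available")
    placement: Dict[int, str] = {}
    rank = 0
    for host, slots in hosts:
        for _ in range(slots):
            if rank == np:
                return placement
            placement[rank] = host
            rank += 1
    return placement
```

With four hosts of one slot each (the earlier -host example), ranks 0 to 3 land on red, red01, red02, and red03. With four slots per host and 16 processes, ranks 4 to 7 land on red01, matching the output above.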

You can uninstall MPI in the following manner.

(ENV3) you@yourlaptop:~ $ cms mpi uninstall raspberry "red,red0[1-3]"

mpi uninstall raspberry red,red0[1-3]

# ----------------------------------------------------------------------

# Uninstall MPI on hosts

# ----------------------------------------------------------------------

INFO: Uninstalling mpi on hosts

INFO: sudo apt-get --purge remove openmpi-bin -y; source ~/ENV3/bin/activate; pip3 uninstall mpi4py -y"

+-------+------------+--------+

| host | returncode | stderr |

+-------+------------+--------+

| red | 0 | |

| red01 | 0 | |

| red02 | 0 | |

| red03 | 0 | |

+-------+------------+--------+

Usage:

mpi deploy raspberry HOSTS

mpi deploy ubuntu HOSTS

mpi uninstall raspberry HOSTS

mpi uninstall ubuntu HOSTS

This command is used to install MPI on a cluster running Raspberry Pi OS or Ubuntu OS.

Arguments:

HOSTS parameterized list of hosts

Description:

mpi deploy raspberry HOSTS

Will deploy mpi for raspberry OS HOSTS in a parallel manner and return installation results.

mpi deploy ubuntu HOSTS

Will deploy mpi for ubuntu OS HOSTS (possibly running on raspberry pi platform) in a parallel manner and return installation results.

mpi uninstall raspberry HOSTS

Will uninstall mpi packages from raspberry OS HOSTS.

mpi uninstall ubuntu HOSTS

Will uninstall mpi packages from ubuntu OS HOSTS.
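HOSTS is a parameterized list. As the deploy output above shows, red,red0[1-3] stands for red, red01, red02, and red03. The same notation is used later for port lists such as 80[80-85]. The minimal sketch below shows such an expansion for illustration only. cloudmesh uses its own parser, and the bracket rules here (zero-padding taken from the start value, comma lists inside brackets) are our assumptions. `expand` is a hypothetical helper.

```python
import re
from typing import List

# prefix[body]suffix, where body is e.g. "1-3" or "01-03,05"
_RANGE = re.compile(r"^(?P<prefix>[^\[\]]*)\[(?P<body>[^\[\]]+)\](?P<suffix>[^\[\]]*)$")


def _split_top_level(spec: str) -> List[str]:
    """Split on commas that are not inside [...]."""
    items, depth, current = [], 0, ""
    for ch in spec:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "," and depth == 0:
            items.append(current)
            current = ""
        else:
            current += ch
    items.append(current)
    return [item.strip() for item in items if item.strip()]


def expand(spec: str) -> List[str]:
    """Expand a parameterized list such as 'red,red0[1-3]' into names."""
    result: List[str] = []
    for item in _split_top_level(spec):
        match = _RANGE.match(item)
        if not match:
            result.append(item)  # plain name, no brackets
            continue
        for part in match["body"].split(","):
            if "-" in part:
                start, end = part.split("-")
                # Keep leading zeros if the start value has them, e.g. 01-03.
                width = len(start) if start.startswith("0") else 0
                numbers = [str(n).zfill(width) for n in range(int(start), int(end) + 1)]
            else:
                numbers = [part]
            result.extend(f"{match['prefix']}{n}{match['suffix']}" for n in numbers)
    return result
```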

To learn more about MPI4PY, including examples of communication mechanisms, please see this report.

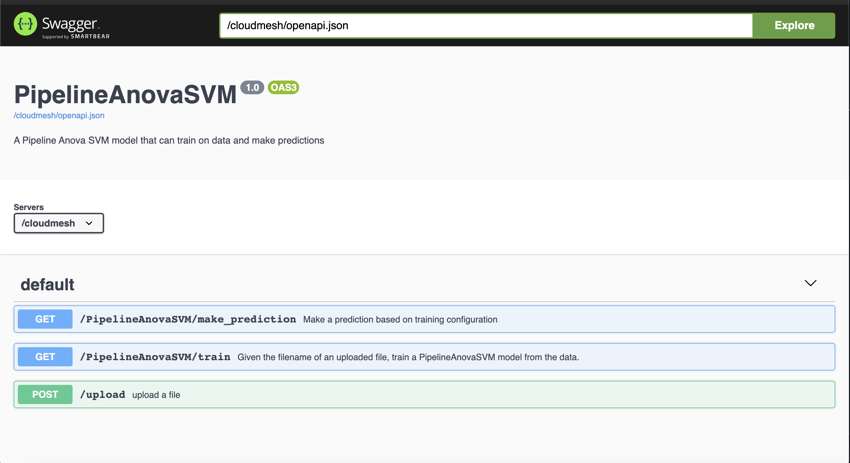

In this tutorial we will use a cms-burn created cluster to install Docker, create a Docker container for the cloudmesh-openapi service, deploy the container on Docker, install K3s, deploy the container on K3s, and enable access to the container from an external host. Finally, we automatically deploy an OpenAPI service onto a K3s cluster given only its Python and YAML files.

As of 4/29/21, this has been tested on Raspberry Pis running Ubuntu.

Learning Objectives

Topics covered

Many tutorials walk you through individual components of our learning objectives. This tutorial walks you step by step through creating a service and deploying it on a cms-burn created cluster.

This tutorial assumes a cluster burned using one of the following methods:

Burn a Raspberry OS Cluster (not currently tested)

Burn an Ubuntu Cluster (currently tested)

Access your cluster manager (red) and get the cloudmesh Docker installer.

laptop$ ssh red

red$ cd cm

red$ cloudmesh-installer get docker-command

red$ cd ..

Now we install Docker.

red$ cms docker deploy --host=localhost

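Let us add our user to the Docker group so we can execute Docker commands without sudo. The group change takes effect after you log out and back in (or start a new shell with newgrp docker).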

red$ sudo usermod -aG docker ubuntu

Let us verify the install by checking the version.

red$ docker version

We will create a file named Dockerfile in a directory named cloudmesh-openapi-container. Substitute your preferred editor if you do not like emacs.

red$ mkdir cloudmesh-openapi-container

red$ cd cloudmesh-openapi-container

red$ emacs Dockerfile

In Dockerfile, add the following lines. They provide Docker with the commands needed to install cloudmesh-openapi and the packages needed for our PipelineAnovaSVM example into an Ubuntu container.

# syntax=docker/dockerfile:1

FROM ubuntu:20.04

# Set before any package installation so that tzdata and other packages never prompt

ARG DEBIAN_FRONTEND=noninteractive

ENV TZ=America/Indiana/Indianapolis

RUN mkdir cm

RUN apt-get update && apt-get install -y python3-venv python3-wheel python3-dev python3-pip build-essential git tzdata emacs

RUN ln -s /usr/bin/pip3 /usr/bin/pip

RUN pip install pip -U

RUN pip install cloudmesh-installer

RUN cd cm; cloudmesh-installer get openapi

# The PyPI package "sklearn" is deprecated and no longer installs; use scikit-learn

RUN pip install scikit-learn pandas

CMD /bin/bash

Now we build an image based on the instructions provided in Dockerfile. We name the image cloudmesh-openapi. The . instructs Docker to use the Dockerfile in the present working directory.

red$ docker build -t cloudmesh-openapi .

Now we start a Docker container using our cloudmesh-openapi image. The -it flags provide an interactive terminal, -p maps the container port 8080 to the host port 8080, and /bin/bash is the command to run in the container.

red$ docker run -it -p 8080:8080 cloudmesh-openapi /bin/bash

Generate the service yaml.

container$ cms openapi generate PipelineAnovaSVM \
--filename=./tests/Scikitlearn-experimental/sklearn_svm.py \
--import_class \
--enable_upload

Start the service (defaults to port 8080). Leave this running and go to a new terminal.

container$ cms openapi server start ./tests/Scikitlearn-experimental/sklearn_svm.yaml --host=0.0.0.0

In another terminal on your laptop, you can now access the service at red.local:8080, because we mapped the container port 8080 to the host port 8080.

In a web browser, go to http://red.local:8080/cloudmesh/ui to access the web UI of the service.

Alternatively, you can access the service directly from the command line of your laptop.

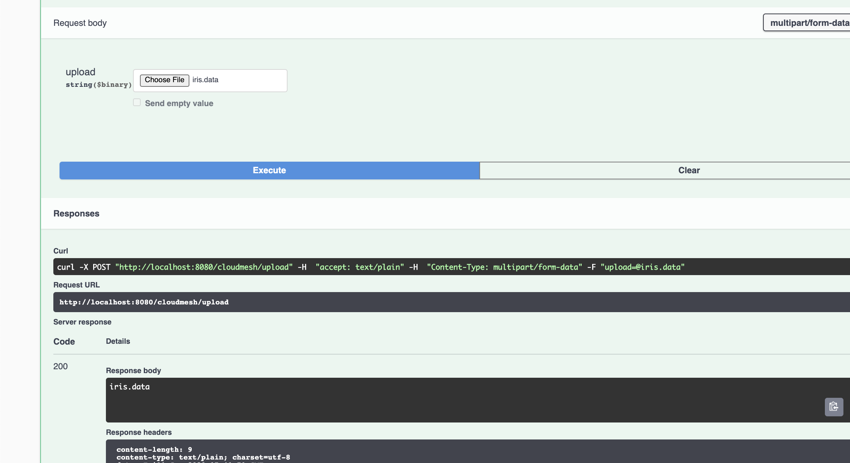

laptop$ curl -L -o iris.data "https://raw.githubusercontent.com/cloudmesh/cloudmesh-openapi/main/tests/Scikitlearn-experimental/iris.data"

laptop$ export CMSIP=red.local:8080

laptop$ curl -X POST "http://$CMSIP/cloudmesh/upload" -H "accept: text/plain" -H "Content-Type: multipart/form-data" -F "upload=@iris.data"

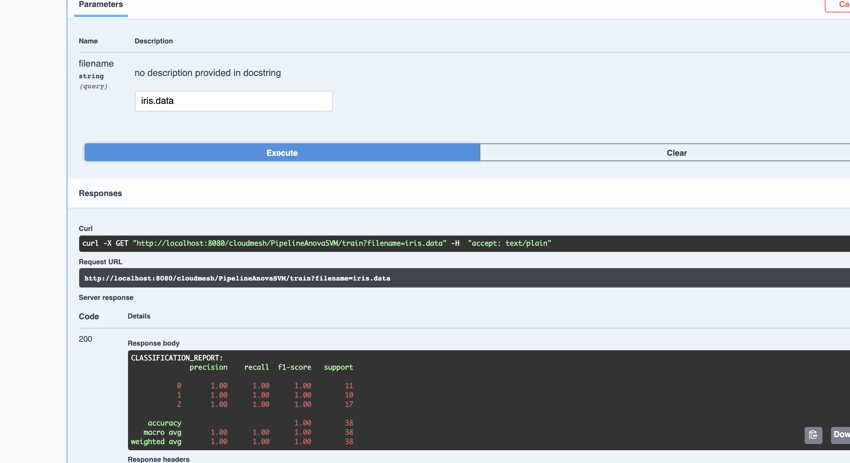

laptop$ curl -X GET "http://$CMSIP/cloudmesh/PipelineAnovaSVM/train?filename=iris.data" -H "accept: text/plain"

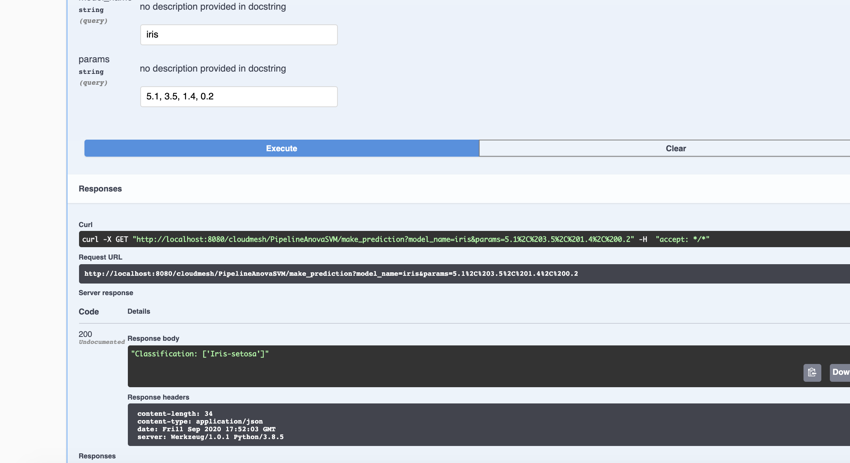

laptop$ curl -X GET "http://$CMSIP/cloudmesh/PipelineAnovaSVM/make_prediction?model_name=iris&params=5.1%2C%203.5%2C%201.4%2C%200.2" -H "accept: */*"
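The query string of the make_prediction request is the URL-encoded form of model_name=iris and params=5.1, 3.5, 1.4, 0.2. The Python sketch below uses only the standard library to build the same URL. The endpoint path and parameter names come from the curl command above. `prediction_url` and `predict` are hypothetical helpers. `predict` performs the equivalent GET request and is not exercised here.

```python
from typing import Sequence
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def prediction_url(host: str, model_name: str, features: Sequence[float]) -> str:
    """Build the make_prediction URL; features are joined as '5.1, 3.5, ...'."""
    query = urlencode(
        {"model_name": model_name, "params": ", ".join(str(f) for f in features)},
        quote_via=quote,  # spaces become %20 (the default quote_plus would use '+')
    )
    return f"http://{host}/cloudmesh/PipelineAnovaSVM/make_prediction?{query}"


def predict(host: str, model_name: str, features: Sequence[float]) -> str:
    """Send the GET request and return the response body as text."""
    with urlopen(prediction_url(host, model_name, features)) as response:
        return response.read().decode()
```

For example, `predict("red.local:8080", "iris", [5.1, 3.5, 1.4, 0.2])` sends the same request as the curl command, assuming the service is running and the model has been trained.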

You can now ctrl-C and exit the running container to stop the service.

We need to save the Docker image so we can provide it to our K3s cluster. Alternatively, you can upload it to a Docker registry such as Docker Hub.

We save our image in a tarfile named cloudmesh-openapi.tar.

red$ docker save --output cloudmesh-openapi.tar cloudmesh-openapi:latest

We will reference this file later to import it to our K3s cluster.

Enable containers in the kernel, and wait for the cluster to reboot.

laptop$ cms pi k3 enable containers red,red0[1-3]

Now install K3s.

laptop$ cms pi k3 install cluster red,red0[1-3]

Verify all nodes show up.

laptop$ cms pi k3 cluster info red

cloudmesh-openapi Image into All K3s Nodes

As we are not using an image repository, we need to copy our cloudmesh-openapi.tar file to all K3s nodes and import the image into K3s.

red$ cms pi k3 import image red,red0[1-3] cloudmesh-openapi.tar /home/ubuntu

pi k3 import image red,red0[1-3] cloudmesh-openapi.tar /home/ubuntu

INFO: Copying image to ['red', 'red01', 'red02', 'red03'] using source: cloudmesh-openapi.tar and destination: /home/ubuntu

INFO: This may take a few minutes depending on the file size and number of destinations

+---------+---------+--------+

| host | success | stdout |

+---------+---------+--------+

| red | True | |

| red01 | True | |

| red02 | True | |

| red03 | True | |

+---------+---------+--------+

INFO: Import image on ['red', 'red01', 'red02', 'red03'] using filepath:/home/ubuntu/cloudmesh-openapi.tar

+---------+---------+--------------------------------------------------+

| host | success | stdout |

+---------+---------+--------------------------------------------------+

| red | True | unpacking docker.io/library/cloudmesh- |

| | | openapi:latest (sha256:829d62dafbb8c3335804517d6 |

| | | 00313e64be1983b93286328f6c0f66f7c4781ad)...done |

| red01 | True | unpacking docker.io/library/cloudmesh- |

| | | openapi:latest (sha256:829d62dafbb8c3335804517d6 |

| | | 00313e64be1983b93286328f6c0f66f7c4781ad)...done |

| red02 | True | unpacking docker.io/library/cloudmesh- |

| | | openapi:latest (sha256:829d62dafbb8c3335804517d6 |

| | | 00313e64be1983b93286328f6c0f66f7c4781ad)...done |

| red03 | True | unpacking docker.io/library/cloudmesh- |

| | | openapi:latest (sha256:829d62dafbb8c3335804517d6 |

| | | 00313e64be1983b93286328f6c0f66f7c4781ad)...done |

+---------+---------+--------------------------------------------------+

Verify that the image is present.

red$ sudo k3s ctr images list

To create a Pod in Kubernetes, you need to define a YAML file. We create one named cloudmesh-openapi-pod.yaml. A pod is the smallest deployable unit of computing in K8s and is a group of one or more containers; in our case, one.

Create cloudmesh-openapi-pod.yaml and paste the following lines into the file.

apiVersion: v1
kind: Pod
metadata:
  name: cloudmesh-openapi-pod
  labels:
    app: cloudmesh-openapi-pod
spec:
  containers:
  - name: cloudmesh-openapi
    image: cloudmesh-openapi:latest
    imagePullPolicy: Never
    command:
    - "sleep"
    - "604800"

This defines a pod named cloudmesh-openapi-pod containing one container named cloudmesh-openapi. The container is based on our cloudmesh-openapi:latest image and runs the command sleep 604800. This keeps the container running for 7 days (604800 seconds), after which it exits and is restarted according to the default restart policy, Always. The .metadata.labels.app field is important because it allows us to select our pod in the load-balancer service that follows. The container's imagePullPolicy: Never setting (.spec.containers[].imagePullPolicy) is also important. It prevents K8s from trying to download our image from any repository and makes it use the local copy instead.
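Because kubectl apply -f also accepts JSON, the same manifest can be generated from Python. This is handy when the image tag or the sleep period changes. The sketch below mirrors the YAML above. `openapi_pod` is a hypothetical helper. Its JSON output could be saved as cloudmesh-openapi-pod.json and applied with sudo kubectl apply -f cloudmesh-openapi-pod.json.

```python
import json


def openapi_pod(name: str = "cloudmesh-openapi-pod",
                image: str = "cloudmesh-openapi:latest",
                sleep_seconds: int = 7 * 24 * 60 * 60) -> dict:
    """Pod manifest equivalent to cloudmesh-openapi-pod.yaml."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        # The label is what the load-balancer service selects on.
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [{
                "name": "cloudmesh-openapi",
                "image": image,
                "imagePullPolicy": "Never",  # use the image imported into K3s
                "command": ["sleep", str(sleep_seconds)],
            }]
        },
    }


if __name__ == "__main__":
    print(json.dumps(openapi_pod(), indent=2))
```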

Deploy your pod by applying the cloudmesh-openapi-pod.yaml configuration.

red$ sudo kubectl apply -f ./cloudmesh-openapi-pod.yaml

Check that the pod is running.

red$ sudo kubectl get pods

We need to start a shell in our Pod so we can generate and run the cloudmesh-openapi service.

red$ sudo kubectl exec --stdin --tty cloudmesh-openapi-pod -- /bin/bash

We can now run our service generate and start commands.

container$ cms openapi generate PipelineAnovaSVM \
--filename=./tests/Scikitlearn-experimental/sklearn_svm.py \
--import_class \
--enable_upload

container$ cms openapi server start ./tests/Scikitlearn-experimental/sklearn_svm.yaml --host=0.0.0.0

You can now exit; the container and the service will continue running.

Kubernetes services expose your cluster Pods to external networks. K3s comes with a built-in load balancer that watches for Kubernetes LoadBalancer services and then deploys the necessary network infrastructure to make your service accessible.

Create a file named cloudmesh-openapi-lb-service.yaml and paste the following lines.

apiVersion: v1
kind: Service
metadata:
  name: cloudmesh-openapi-lb-service
spec:
  selector:
    app: cloudmesh-openapi-pod
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
    nodePort: 30000
  type: LoadBalancer

It is important that .spec.selector.app matches the label defined in your pod YAML. The port is the port on which the service is reachable inside the cluster. The targetPort is the port in the container to which traffic is forwarded. The nodePort is the port on each node through which external networks can access the service. The nodePort must lie within the cluster's NodePort range, which by default is 30000-32767.
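Two mistakes are common here: a selector that does not match the pod's label, and a nodePort outside the allowed range. The minimal sketch below checks both for a service manifest written as a Python dictionary. It assumes the default NodePort range has not been changed. `openapi_service` and `check_service` are hypothetical helpers.

```python
from typing import Dict, List

NODE_PORT_RANGE = range(30000, 32768)  # Kubernetes default: 30000-32767


def openapi_service(app_label: str, port: int = 8080, node_port: int = 30000) -> dict:
    """Service manifest equivalent to cloudmesh-openapi-lb-service.yaml."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "cloudmesh-openapi-lb-service"},
        "spec": {
            "selector": {"app": app_label},
            "ports": [{"protocol": "TCP", "port": port,
                       "targetPort": port, "nodePort": node_port}],
            "type": "LoadBalancer",
        },
    }


def check_service(service: dict, pod_labels: Dict[str, str]) -> List[str]:
    """Return a list of problems; an empty list means the service looks consistent."""
    problems = []
    for key, value in service["spec"]["selector"].items():
        if pod_labels.get(key) != value:
            problems.append(f"selector {key}={value} does not match the pod labels")
    for entry in service["spec"]["ports"]:
        if "nodePort" in entry and entry["nodePort"] not in NODE_PORT_RANGE:
            problems.append(f"nodePort {entry['nodePort']} is outside 30000-32767")
    return problems
```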

Deploy the load balancer.

red$ sudo kubectl apply -f ./cloudmesh-openapi-lb-service.yaml

Check to ensure it deployed.

red$ sudo kubectl get services -o wide

Check that the load balancer pods are deployed on all agent nodes.

red$ sudo kubectl get pods

cloudmesh-openapi Pod

Because the load balancer runs on all nodes and forwards traffic to the existing pod, we can now reach our service from our laptop at red.local:30000 (the nodePort). red.local:8080 works as well, because the K3s load balancer also listens on the service port (8080) on each node.

On your laptop web browser browse to http://red.local:30000/cloudmesh/ui

Alternatively, interact with the service from the command line.

laptop$ curl -L -o iris.data "https://raw.githubusercontent.com/cloudmesh/cloudmesh-openapi/main/tests/Scikitlearn-experimental/iris.data"

laptop$ export CMSIP=red.local:30000

laptop$ curl -X POST "http://$CMSIP/cloudmesh/upload" -H "accept: text/plain" -H "Content-Type: multipart/form-data" -F "upload=@iris.data"

laptop$ curl -X GET "http://$CMSIP/cloudmesh/PipelineAnovaSVM/train?filename=iris.data" -H "accept: text/plain"

laptop$ curl -X GET "http://$CMSIP/cloudmesh/PipelineAnovaSVM/make_prediction?model_name=iris&params=5.1%2C%203.5%2C%201.4%2C%200.2" -H "accept: */*"

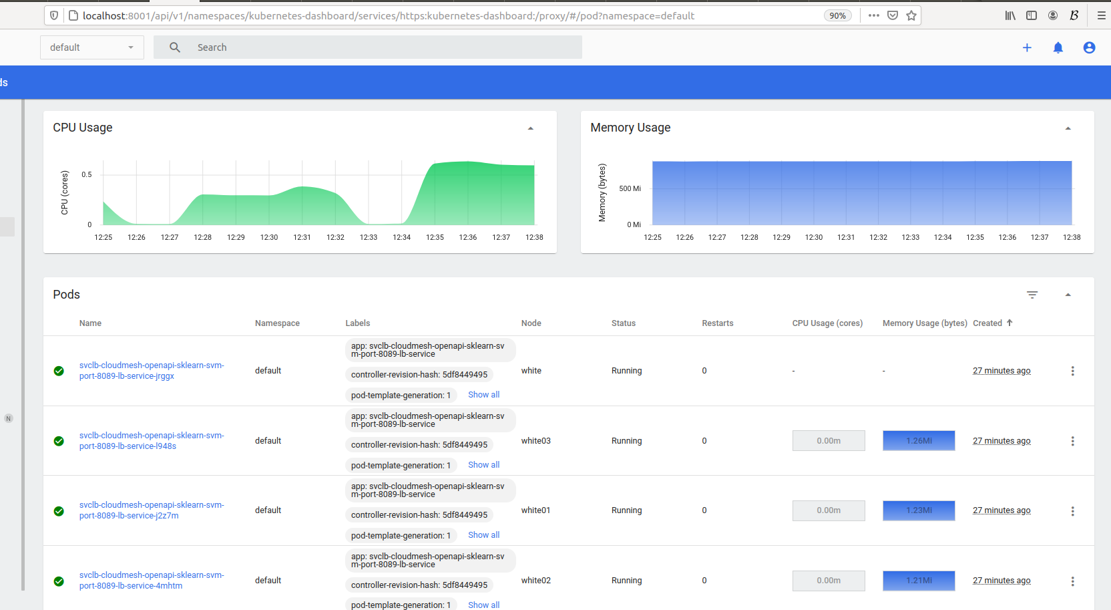

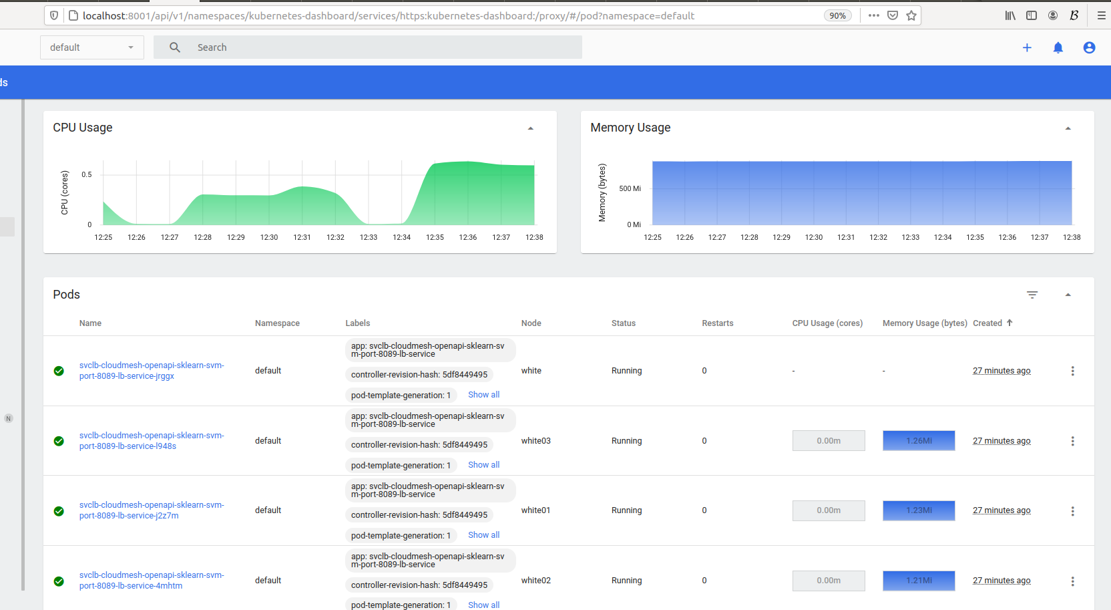

We have provided a command that can automatically deploy an OpenAPI service given the YAML file, python file, the server, and the ports you want it deployed on. We expect the user to have previously used cloudmesh-openapi to generate these files as needed.

cms pi k3 api deploy SERVER PORTS YAML PYTHON

Below is an example invocation in which we deploy the Scikit-learn Pipeline Anova SVM example as a service using the YAML file sklearn_svm.yaml and the Python file sklearn_svm_upload-enabled.py.

laptop$ cms pi k3 api deploy red 80[80-85] sklearn_svm.yaml sklearn_svm_upload-enabled.py

pi k3 api deploy red 80[80-85] sklearn_svm.yaml sklearn_svm_upload-enabled.py

INFO: Deploying cloudmesh openapi service based on yaml:sklearn_svm.yaml

python file: sklearn_svm_upload-enabled.py to ports: ['8080', '8081', '8082','8083', '8084', '8085'] on server red

INFO: Deploying service for port: 8080

INFO: Deploying service for port: 8081

INFO: Deploying service for port: 8082

INFO: Deploying service for port: 8083

INFO: Deploying service for port: 8084

INFO: Deploying service for port: 8085

INFO: Services are available at:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 39h

cloudmesh-openapi-sklearn-svm-port-8080-lb-service LoadBalancer 10.43.157.137 192.168.1.16 8080:30988/TCP 89s

cloudmesh-openapi-sklearn-svm-port-8081-lb-service LoadBalancer 10.43.105.151 192.168.1.16 8081:31733/TCP 69s

cloudmesh-openapi-sklearn-svm-port-8082-lb-service LoadBalancer 10.43.66.0 192.168.1.16 8082:30442/TCP 55s

cloudmesh-openapi-sklearn-svm-port-8083-lb-service LoadBalancer 10.43.212.54 192.168.1.16 8083:31632/TCP 33s

cloudmesh-openapi-sklearn-svm-port-8084-lb-service LoadBalancer 10.43.52.81 192.168.1.16 8084:30334/TCP 22s

cloudmesh-openapi-sklearn-svm-port-8085-lb-service LoadBalancer 10.43.238.192 192.168.1.16 8085:31442/TCP 8s
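Each service is reachable on its own port (8080 to 8085) through the load balancer. It is also reachable on an automatically assigned NodePort, for example 30988. To script against the services, you can read that mapping from the table above. The sketch below parses the plain-text output of kubectl get services. `port_map` is hypothetical. For anything beyond a quick script, the output of kubectl get services -o json is the sturdier input.

```python
from typing import Dict, Tuple


def port_map(get_services_output: str) -> Dict[str, Tuple[int, int]]:
    """Map LoadBalancer service names to (service port, node port)."""
    result: Dict[str, Tuple[int, int]] = {}
    for line in get_services_output.strip().splitlines()[1:]:  # skip the header
        name, svc_type, _cluster_ip, _external_ip, ports, _age = line.split()
        if svc_type != "LoadBalancer":
            continue
        # A port entry looks like "8080:30988/TCP"; only the first port is read.
        port, node_port = ports.split(",")[0].split("/")[0].split(":")
        result[name] = (int(port), int(node_port))
    return result
```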

You can now access and interact with the service in the same manner as we conducted in section 17.

Next we will install a Kubernetes Dashboard so we can monitor the resource consumption of our services.

If using Raspberry OS on the pis, run:

you@your-laptop:~$ cms pi k3 dashboard create red

If using Ubuntu Server on the pis, run:

you@your-laptop:~$ cms pi k3 dashboard create red --ubuntu

Both commands will automatically start the dashboard. However, on Ubuntu Server, cms pi k3 dashboard start red needs to be run when the pi is rebooted.

We can easily access the Web UI Dashboard for easy management of our cluster.

First, let us connect to the new dashboard created above.

you@your-laptop:~$ cms pi k3 dashboard connect red

We should get a green “Connection created” message. We can now check on the status of our dashboard and obtain our token to log in.

you@your-laptop:~$ cms pi k3 dashboard info

pi k3 dashboard info

INFO: Finding running dashboards...

+---------------------+-------------+------------+------------------+-------+

| Server Access Point | Remote Port | Local Port | Dashboard Status | PID |

+---------------------+-------------+------------+------------------+-------+

| red | 8001 | 8001 | Active | 99240 |

+---------------------+-------------+------------+------------------+-------+

INFO: Dashboard Link:

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

INFO: Fetching authentication token...

# A long token will go here

Note: If Dashboard Status says “Up but not ready”, the K3s dashboard is likely still coming online. Wait a few moments and try the info command again.

Take note of the authentication token given. To open the dashboard, you may click on the link provided by the info command or use the following command to open it automatically in a browser.

you@your-laptop:~$ cms pi k3 dashboard

pi k3 dashboard

Opening dashboard...

You can monitor your pods' resource consumption on the Pods tab.

When ready, you can then disconnect from your dashboard with the following:

you@your-laptop:~$ cms pi k3 dashboard disconnect

While the containers persist after a reboot, the services currently do not restart automatically.

To restart the services, use the command

cms pi k3 api start SERVER PORTS YAML PYTHON

YAML and PYTHON take only the base file names; the files are not copied again.

Here is an example invocation.

laptop$ cms pi k3 api start red 80[80-85] sklearn_svm.yaml sklearn_svm_upload-enabled.py

pi k3 api start red 80[80-85] sklearn_svm.yaml sklearn_svm_upload-enabled.py

INFO: Starting services in on ports ['8080', '8081', '8082', '8083', '8084', '8085']

INFO: Service on 8080 successfully started

INFO: Service on 8081 successfully started

INFO: Service on 8082 successfully started

INFO: Service on 8083 successfully started

INFO: Service on 8084 successfully started

INFO: Service on 8085 successfully started

Useful kubectl commands to debug a broken pod/service

sudo kubectl describe pod cloudmesh-openapi-pod

sudo kubectl logs --previous cloudmesh-openapi-pod cloudmesh-openapi

sudo kubectl logs cloudmesh-openapi-pod cloudmesh-openapi

sudo kubectl delete pod cloudmesh-openapi-pod

sudo kubectl delete service cloudmesh-openapi-lb-service

sudo kubectl get pods --show-labels

sudo kubectl edit pod cloudmesh-openapi-pod

If you have trouble accessing the pod shell, as shown below, try stopping and restarting the K3s services.

(ENV3) ubuntu@red:~/cloudmesh-openapi-container$ sudo kubectl exec --stdin --tty cloudmesh-openapi-pod -- /bin/bash

error: unable to upgrade connection: Authorization error (user=kube-apiserver, verb=create, resource=nodes, subresource=proxy)

Stop and restart the K3s services.

red$ cms pi k3 stop agent red,red0[1-3]

red$ cms pi k3 stop server red

red$ cms pi k3 start server red

red$ cms pi k3 start agent red,red0[1-3]

laptop$ cms pi k3 uninstall cluster red,red0[1-3]

red$ sudo apt-get purge docker-ce docker-ce-cli containerd.io

red$ sudo rm -rf /var/lib/docker

red$ sudo rm -rf /var/lib/containerd

Install, manage, operate, and uninstall K3s on a cluster of Raspberry Pis running Raspberry OS or Ubuntu.

Learning Objectives

Topics covered

K3s provides a lightweight, single-binary distribution of Kubernetes with very close feature parity to K8s, which makes it well suited to single-board computers like the Raspberry Pi. In this tutorial we will install, verify, and uninstall K3s on a cluster of Pis that was created using the Cloudmesh burn software.

This tutorial assumes a cluster burned using one of the following methods:

The tutorial supports both Raspberry OS and Ubuntu with no required user input change.

pi k3 enable containers NAMES

pi k3 install server NAMES

pi k3 install agent NAMES SERVER

pi k3 install cluster NAMES

pi k3 uninstall server NAMES

pi k3 uninstall agent NAMES

pi k3 uninstall cluster NAMES

pi k3 kill NAMES

pi k3 start server NAMES

pi k3 start agent NAMES

pi k3 start cluster NAMES

pi k3 stop server NAMES

pi k3 stop agent NAMES

pi k3 stop cluster NAMES

pi k3 remove node NAMES SERVER

pi k3 cluster info SERVER

pi k3 dashboard create SERVER [--ubuntu]

pi k3 dashboard start SERVER

pi k3 dashboard connect SERVER

pi k3 dashboard disconnect [SERVER]

pi k3 dashboard info

pi k3 dashboard

pi k3 import image NAMES SOURCE DESTINATION

pi k3 api deploy SERVER PORTS YAML PYTHON

pi k3 api start SERVER PORTS YAML PYTHON

The pi k3 enable containers command adds 'cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory' to the end of the arguments in cmdline.txt if they are not already present, and reboots the machines. This is required to enable containers in the kernel.
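The edit is idempotent: arguments that are already present are left alone. The sketch below shows that logic applied to the file's content. It assumes the usual single-line cmdline.txt, which is commonly /boot/cmdline.txt on Raspberry OS and /boot/firmware/cmdline.txt on Ubuntu. `enable_cgroups` is a hypothetical helper. It neither writes the file nor reboots.

```python
from typing import List

REQUIRED: List[str] = ["cgroup_enable=cpuset", "cgroup_memory=1", "cgroup_enable=memory"]


def enable_cgroups(cmdline: str) -> str:
    """Append any missing cgroup arguments; cmdline.txt must stay on one line."""
    args = cmdline.split()
    missing = [arg for arg in REQUIRED if arg not in args]
    return " ".join(args + missing) + "\n"
```

Calling the function twice gives the same result as calling it once, so re-running the command on an already configured node changes nothing. After a real edit, the node must still be rebooted.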

you@your-laptop:~$ cms pi k3 enable containers red,red0[1-3]

pi k3 enable containers red,red0[1-3]

INFO: Enabling cgroups for ['red', 'red01', 'red02', 'red03']

+-------+---------+--------+

| host | success | stdout |

+-------+---------+--------+

| red | True | |

| red01 | True | |

| red02 | True | |

| red03 | True | |

+-------+---------+--------+

INFO: Executing `sudo reboot` for ['red01', 'red02', 'red03']

INFO: Executing `sudo reboot` for red

This command will install a K3s cluster with the manager (red) acting as the K3s server; the workers and the manager will be installed as agents of that server. This also installs and configures a Web UI Dashboard.

you@yourlaptop:~$ cms pi k3 install cluster red,red0[1-3]

pi k3 install cluster red,red0[1-3]

INFO: Installing K3s as stand-alone server on ['red']

+------+---------+--------------------------------------------------+

| host | success | stdout |

+------+---------+--------------------------------------------------+

| red | True | [INFO] Finding release for channel stable |

| | | [INFO] Using v1.20.5+k3s1 as release |

| | | [INFO] Downloading hash https://github.com/k3s- |

| | | io/k3s/releases/download/v1.20.5+k3s1/sha256sum- |

| | | arm.txt |

| | | [INFO] Downloading binary https://github.com/k3 |

| | | s-io/k3s/releases/download/v1.20.5+k3s1/k3s-armh |

| | | f |

| | | [INFO] Verifying binary download |

| | | [INFO] Installing k3s to /usr/local/bin/k3s |

| | | [INFO] Creating /usr/local/bin/kubectl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/crictl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/ctr symlink to |

| | | k3s |

| | | [INFO] Creating killall script |

| | | /usr/local/bin/k3s-killall.sh |

| | | [INFO] Creating uninstall script |

| | | /usr/local/bin/k3s-uninstall.sh |

| | | [INFO] env: Creating environment file |

| | | /etc/systemd/system/k3s.service.env |

| | | [INFO] systemd: Creating service file |

| | | /etc/systemd/system/k3s.service |

| | | [INFO] systemd: Enabling k3s unit |

| | | [INFO] systemd: Starting k3s |

+------+---------+--------------------------------------------------+

INFO: Installing K3s on red as agent of red

INFO: Fetching the server token

+------+---------+--------------------------------------------------+

| host | success | stdout |

+------+---------+--------------------------------------------------+

| red | True | [INFO] Finding release for channel stable |

| | | [INFO] Using v1.20.5+k3s1 as release |

| | | [INFO] Downloading hash https://github.com/k3s- |

| | | io/k3s/releases/download/v1.20.5+k3s1/sha256sum- |

| | | arm.txt |

| | | [INFO] Skipping binary downloaded, installed |

| | | k3s matches hash |

| | | [INFO] Skipping /usr/local/bin/kubectl symlink |

| | | to k3s, already exists |

| | | [INFO] Skipping /usr/local/bin/crictl symlink |

| | | to k3s, already exists |

| | | [INFO] Skipping /usr/local/bin/ctr symlink to |

| | | k3s, already exists |

| | | [INFO] Creating killall script |

| | | /usr/local/bin/k3s-killall.sh |

| | | [INFO] Creating uninstall script |

| | | /usr/local/bin/k3s-agent-uninstall.sh |

| | | [INFO] env: Creating environment file |

| | | /etc/systemd/system/k3s-agent.service.env |

| | | [INFO] systemd: Creating service file |

| | | /etc/systemd/system/k3s-agent.service |

| | | [INFO] systemd: Enabling k3s-agent unit |

| | | [INFO] systemd: Starting k3s-agent |

+------+---------+--------------------------------------------------+

INFO: Installing K3s on ['red01', 'red02', 'red03'] as agent of red

INFO: Fetching the server token

+-------+---------+--------------------------------------------------+

| host | success | stdout |

+-------+---------+--------------------------------------------------+

| red01 | True | [INFO] Finding release for channel stable |

| | | [INFO] Using v1.20.5+k3s1 as release |

| | | [INFO] Downloading hash https://github.com/k3s- |

| | | io/k3s/releases/download/v1.20.5+k3s1/sha256sum- |

| | | arm.txt |

| | | [INFO] Downloading binary https://github.com/k3 |

| | | s-io/k3s/releases/download/v1.20.5+k3s1/k3s-armh |

| | | f |

| | | [INFO] Verifying binary download |

| | | [INFO] Installing k3s to /usr/local/bin/k3s |

| | | [INFO] Creating /usr/local/bin/kubectl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/crictl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/ctr symlink to |

| | | k3s |

| | | [INFO] Creating killall script |

| | | /usr/local/bin/k3s-killall.sh |

| | | [INFO] Creating uninstall script |

| | | /usr/local/bin/k3s-agent-uninstall.sh |

| | | [INFO] env: Creating environment file |

| | | /etc/systemd/system/k3s-agent.service.env |

| | | [INFO] systemd: Creating service file |

| | | /etc/systemd/system/k3s-agent.service |

| | | [INFO] systemd: Enabling k3s-agent unit |

| | | [INFO] systemd: Starting k3s-agent |

... Some output removed for brevity
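The installer log above shows three steps: downloading a hash file (sha256sum-arm.txt), verifying the binary download, and skipping the download when the installed k3s matches the hash. Each check compares a file's SHA-256 digest with the entry listed for it in a sha256sum-style file. The Python sketch below illustrates that comparison. The helper names are hypothetical, and the k3s install script does its own check in shell, so this is not its actual code.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_hash(sums_text: str, name: str) -> str:
    """Find the digest listed for `name` in sha256sum-style text.

    Each line is "<hex digest>  <file name>"; a leading '*' on the name
    (the binary-mode marker) is ignored.
    """
    for line in sums_text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].lstrip("*") == name:
            return parts[0].lower()
    raise KeyError(f"no digest listed for {name}")


def matches_listed_hash(path: Path, sums_text: str, name: str) -> bool:
    """True if the file's SHA-256 equals the digest listed for `name`."""
    return sha256_of(path) == expected_hash(sums_text, name)
```

Suppose the hash file lists the armhf binary as k3s-armhf, as the download URL suggests. Then calling matches_listed_hash on /usr/local/bin/k3s with that file's text and the name "k3s-armhf" would mirror the "installed k3s matches hash" check.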

Wait a moment for k3s to set up initial containers.

Let us check that the server reports the expected nodes as members.

We can run remote commands with the cms host ssh command as well as with K3s-specific commands.

For example, to get cluster information, we can run:

you@your-laptop:~$ cms pi k3 cluster info red

pi k3 cluster info red

INFO: Getting cluster info for red

INFO: sudo kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

red03 Ready <none> 28s v1.20.5+k3s1 10.1.1.4 <none> Raspbian GNU/Linux 10 (buster) 5.10.17-v7l+ containerd://1.4.4-k3s1

red02 Ready <none> 30s v1.20.5+k3s1 10.1.1.3 <none> Raspbian GNU/Linux 10 (buster) 5.10.17-v7l+ containerd://1.4.4-k3s1

red01 Ready <none> 27s v1.20.5+k3s1 10.1.1.2 <none> Raspbian GNU/Linux 10 (buster) 5.10.17-v7l+ containerd://1.4.4-k3s1

red Ready control-plane,master 84s v1.20.5+k3s1 10.1.1.1 192.168.1.22 Raspbian GNU/Linux 10 (buster) 5.10.17-v7l+ containerd://1.4.4-k3s1

INFO: Server node token

K106f1caa41b133e69a69b1e3ac1da3a451393029e382be846eb0bcb7dfc7eab2db::server:2d604411ff6ab2a7c162bc4e82292690

INFO: Containers running on nodes

NODE: red03

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

a24076569fa00 7d23a14d38d24 5 seconds ago Running lb-port-443 0 70adcf7269a03

3332e6b1f602d 7d23a14d38d24 6 seconds ago Running lb-port-80 0 70adcf7269a03

2f1273e3eca91 d24dd28770a36 15 seconds ago Running metrics-server 0 0ca2190dba121

NODE: red02

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

be2ca6f1b77a5 7d23a14d38d24 5 seconds ago Running lb-port-443 0 fc85e830e60bd

98ae1280e7a59 7d23a14d38d24 6 seconds ago Running lb-port-80 0 fc85e830e60bd

ebcc2edeb3926 1e695755cc09d 13 seconds ago Running local-path-provisioner 0 140da1f145714

NODE: red01

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

bb4a9295bc345 7d23a14d38d24 1 second ago Running lb-port-443 0 9fc4bec3faae0

6ba339136ae1a 944e5aba28f45 1 second ago Running traefik 0 87ce8f8685767

491c2d8832f8d 7d23a14d38d24 1 second ago Running lb-port-80 0 9fc4bec3faae0

d12b76e2c51e7 a0ce6ab869a69 12 seconds ago Running coredns 0 a1777698c457c

NODE: red

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID

afa997d47eb33 7d23a14d38d24 4 seconds ago Running lb-port-443 0 524fcbbd2b879

d0921db7d2229 7d23a14d38d24 5 seconds ago Running lb-port-80 0 524fcbbd2b879

Alternatively, we can run the commands directly with cms host ssh, as demonstrated below. This is useful for commands that are not built into cms, or that the user knows better than the equivalent cms shell commands.

NOTE:

Commands passed to cms host ssh are interpreted first by bash and then by Python, so complex commands may not work without elaborate escape sequences.
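The two-stage interpretation can be illustrated with Python's shlex module. For a simple command like the one below, its POSIX mode splits words the same way bash does. The first split, once rejoined, reproduces the line that cms echoes back. The second split assumes cms re-parses that line with shell-like rules, which is an assumption about cms internals. It shows how the escaped quotes end up grouping the remote command into one argument.

```python
import shlex

# The command typed on the laptop, exactly as in the example.
typed = r'cms host ssh red \" sudo kubectl get nodes \"'

# Pass 1 (bash): each \" becomes a literal " and the words are split on
# spaces, so the quotes arrive as separate arguments.
argv = shlex.split(typed)

# Rejoining the arguments after "cms" gives the line that cms echoes back.
rejoined = " ".join(argv[1:])

# Pass 2 (assumed): splitting that line again with shell-like rules makes
# the quotes group the remote command into a single argument.
remote = shlex.split(rejoined)

print(argv)
print(rejoined)
print(remote)
```

The last line printed is ['host', 'ssh', 'red', ' sudo kubectl get nodes ']. Without the backslashes, bash would strip the quotes in the first pass and the remote command would arrive as four separate words.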

you@your-laptop:~$ cms host ssh red \" sudo kubectl get nodes \"

host ssh red " sudo kubectl get nodes "

+------+---------+--------------------------------------------------+

| host | success | stdout |

+------+---------+--------------------------------------------------+

| red | True | NAME STATUS ROLES AGE |

| | | VERSION |

| | | red01 Ready <none> 15m |

| | | v1.20.5+k3s1 |

| | | red02 Ready <none> 15m |

| | | v1.20.5+k3s1 |

| | | red03 Ready <none> 15m |

| | | v1.20.5+k3s1 |

| | | red Ready control-plane,master 16m |

| | | v1.20.5+k3s1 |

+------+---------+--------------------------------------------------+
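You can also check node membership from a script rather than by eye, by parsing the raw text that sudo kubectl get nodes prints (without the wrapped table cms draws around it). The minimal Python sketch below assumes the default layout, in which NAME and STATUS are the first two columns. It also skips blank lines. The names node_status and not_ready are hypothetical helpers, not part of cms.

```python
from typing import Dict, List


def node_status(output: str) -> Dict[str, str]:
    """Map node name to STATUS from the text printed by `kubectl get nodes`.

    Assumes NAME and STATUS are the first two whitespace-separated columns,
    which holds for the default layout and for `-o wide`.
    """
    lines = [ln for ln in output.splitlines() if ln.strip()]  # drop blank lines
    if not lines or lines[0].split()[:2] != ["NAME", "STATUS"]:
        raise ValueError("output does not start with a NAME STATUS header")
    status = {}
    for line in lines[1:]:
        name, state = line.split()[:2]
        status[name] = state
    return status


def not_ready(output: str) -> List[str]:
    """Sorted names of nodes whose STATUS is anything other than exactly 'Ready'."""
    return sorted(name for name, state in node_status(output).items()
                  if state != "Ready")
```

An empty list from not_ready means every node listed by the server reports Ready.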

It is straightforward to create a dashboard for our newly created cluster.

If using Raspberry OS on the Pis, run:

you@your-laptop:~$ cms pi k3 dashboard create red

If using Ubuntu Server on the Pis, run:

you@your-laptop:~$ cms pi k3 dashboard create red --ubuntu

Both commands start the dashboard automatically. However, on Ubuntu Server, you need to run cms pi k3 dashboard start red again whenever the Pi is rebooted.

The web UI dashboard makes it easy to manage our cluster.

First, let us connect to the new dashboard created above.

you@your-laptop:~$ cms pi k3 dashboard connect red

We should get a green “Connection created” message. We can now check on the status of our dashboard and obtain our token to log in.

you@your-laptop:~$ cms pi k3 dashboard info

pi k3 dashboard info

INFO: Finding running dashboards...

+---------------------+-------------+------------+------------------+-------+

| Server Access Point | Remote Port | Local Port | Dashboard Status | PID |

+---------------------+-------------+------------+------------------+-------+

| red | 8001 | 8001 | Active | 99240 |

+---------------------+-------------+------------+------------------+-------+

INFO: Dashboard Link:

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

INFO: Fetching authentication token...

# A long token will go here

Note: If Dashboard Status says “Up but not ready”, the k3s dashboard is likely still coming online. Wait a few moments and run the info command again.
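A script can poll until the status becomes Active instead of rerunning the info command by hand. In the Python sketch below, wait_for and the check callable are hypothetical. check stands for whatever you use to read the Dashboard Status column, for example running cms pi k3 dashboard info and parsing its table.

```python
import time
from typing import Callable


def wait_for(check: Callable[[], str], ready: str = "Active",
             timeout: float = 120.0, interval: float = 5.0) -> str:
    """Call `check` until it returns `ready` or `timeout` seconds elapse.

    `check` is a hypothetical callable that returns the current
    Dashboard Status string, such as "Up but not ready" or "Active".
    """
    deadline = time.monotonic() + timeout
    while True:
        status = check()
        if status == ready:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"dashboard still not ready, last status {status!r}")
        time.sleep(interval)
```

A fixed interval keeps the sketch simple. A growing back-off would also work if the dashboard usually takes longer to come up.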

Take note of the authentication token given. To open the dashboard, you can click the link provided by the info command or use the following command to open it automatically in a browser.

you@your-laptop:~$ cms pi k3 dashboard

pi k3 dashboard

Opening dashboard...
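The dashboard link printed by the info command follows the Kubernetes API server proxy path layout, /api/v1/namespaces/<namespace>/services/<scheme>:<service>:<port name>/proxy/. It is reached through local port 8001, as shown in the Local Port column of the info output. The sketch below rebuilds the link from its parts. proxy_url is a hypothetical helper. The empty port name after the trailing colon selects the service's default (unnamed) port.

```python
def proxy_url(namespace: str, service: str, scheme: str = "https",
              port_name: str = "", host: str = "localhost:8001") -> str:
    """Build an API server proxy URL for a service, reached via a local port.

    Path layout: /api/v1/namespaces/<ns>/services/<scheme>:<name>:<port name>/proxy/
    An empty port name selects the service's default (unnamed) port.
    """
    target = f"{scheme}:{service}:{port_name}"
    return f"http://{host}/api/v1/namespaces/{namespace}/services/{target}/proxy/"


print(proxy_url("kubernetes-dashboard", "kubernetes-dashboard"))
```

With the dashboard's namespace and service name, the call reproduces the link shown by cms pi k3 dashboard info.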

When you are done, you can disconnect from the dashboard with the following command:

you@your-laptop:~$ cms pi k3 dashboard disconnect

Next, we add a new machine, red04, as an agent of the cluster hosted on red.

you@your-laptop:~$ cms pi k3 install agent red04 red

pi k3 install agent red04 red

INFO: Installing K3s on red04 as agent of red

INFO: Fetching the server token

+-------+---------+--------------------------------------------------+

| host | success | stdout |

+-------+---------+--------------------------------------------------+

| red04 | True | [INFO] Finding release for channel stable |

| | | [INFO] Using v1.20.5+k3s1 as release |

| | | [INFO] Downloading hash https://github.com/k3s- |

| | | io/k3s/releases/download/v1.20.5+k3s1/sha256sum- |

| | | arm.txt |

| | | [INFO] Downloading binary https://github.com/k3 |

| | | s-io/k3s/releases/download/v1.20.5+k3s1/k3s-armh |

| | | f |

| | | [INFO] Verifying binary download |

| | | [INFO] Installing k3s to /usr/local/bin/k3s |

| | | [INFO] Creating /usr/local/bin/kubectl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/crictl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/ctr symlink to |

| | | k3s |

| | | [INFO] Creating killall script |

| | | /usr/local/bin/k3s-killall.sh |

| | | [INFO] Creating uninstall script |

| | | /usr/local/bin/k3s-agent-uninstall.sh |

| | | [INFO] env: Creating environment file |

| | | /etc/systemd/system/k3s-agent.service.env |

| | | [INFO] systemd: Creating service file |

| | | /etc/systemd/system/k3s-agent.service |

| | | [INFO] systemd: Enabling k3s-agent unit |

| | | [INFO] systemd: Starting k3s-agent |

+-------+---------+--------------------------------------------------+
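Joining an agent requires the server's address and its node token, which is presumably why the log shows cms fetching the server token first. The k3s documentation describes joining an agent by running the installer with two variables. K3S_URL is the server's URL, on port 6443 by default. K3S_TOKEN is the value stored on the server in /var/lib/rancher/k3s/server/node-token. The Python sketch below only builds such a command string. agent_install_command is a hypothetical helper, and we have not confirmed that cms issues exactly this command.

```python
import shlex


def agent_install_command(server: str, token: str, port: int = 6443) -> str:
    """Return a shell command that installs k3s as an agent of `server`.

    Uses the k3s installer variables K3S_URL and K3S_TOKEN; values are
    shell-quoted only when they contain characters that need it.
    """
    url = f"https://{server}:{port}"
    return ("curl -sfL https://get.k3s.io | "
            f"K3S_URL={shlex.quote(url)} K3S_TOKEN={shlex.quote(token)} sh -")


# Hypothetical values: red's internal IP from the node table and a placeholder token.
print(agent_install_command("10.1.1.1", "<server node token>"))
```

Reusing the token this way is what lets a machine added later, such as red04, join the existing cluster without reconfiguring the server.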

We can also easily uninstall the K3s cluster.

you@your-laptop:~$ cms pi k3 uninstall cluster red,red0[1-3]

pi k3 uninstall cluster red,red0[1-3]

INFO: Uninstalling agent install of K3s on ['red', 'red01', 'red02', 'red03', 'red04']

+-------+---------+--------------------------------------------------+

| host | success | stdout |

+-------+---------+--------------------------------------------------+

| red | True | /usr/bin/systemctl |

| | | Additional k3s services installed, skipping |

| | | uninstall of k3s |

| red01 | True | /usr/bin/systemctl |

| red02 | True | /usr/bin/systemctl |

| red03 | True | /usr/bin/systemctl |

| red04 | True | /usr/bin/systemctl |

+-------+---------+--------------------------------------------------+

INFO: Uninstalling server install of K3s on red

+------+---------+--------------------+

| host | success | stdout |

+------+---------+--------------------+

| red | True | /usr/bin/systemctl |

+------+---------+--------------------+
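Commands such as cms pi k3 uninstall cluster accept compact host lists like red,red0[1-3]. A minimal sketch of expanding this notation follows. It assumes comma-separated items with numeric ranges or lists inside brackets. expand_hosts is a hypothetical function, and the parser cms actually uses may accept more forms.

```python
import re
from typing import List

# First bracket group in a host name: prefix, bracket body, remainder.
_GROUP = re.compile(r"^(.*?)\[([^\]]+)\](.*)$")


def split_top_level(spec: str) -> List[str]:
    """Split on commas that are not inside [...] brackets."""
    parts, depth, current = [], 0, ""
    for ch in spec:
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append(current)
            current = ""
        else:
            current += ch
    parts.append(current)
    return [p for p in parts if p]


def expand_hosts(spec: str) -> List[str]:
    """Expand e.g. 'red,red0[1-3]' into ['red', 'red01', 'red02', 'red03']."""
    hosts: List[str] = []
    for item in split_top_level(spec):
        match = _GROUP.match(item)
        if not match:
            hosts.append(item)  # plain host name, nothing to expand
            continue
        prefix, body, suffix = match.groups()
        for piece in body.split(","):
            if "-" in piece:
                start, end = piece.split("-", 1)
                width = len(start)  # keep zero padding, e.g. [01-10]
                for n in range(int(start), int(end) + 1):
                    hosts.extend(expand_hosts(f"{prefix}{n:0{width}d}{suffix}"))
            else:
                hosts.extend(expand_hosts(f"{prefix}{piece}{suffix}"))
    return hosts
```

Recursing on each generated name lets a remaining bracket group in the suffix be expanded as well.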

Now we will create a new standalone K3s server on red05.

you@your-laptop:~$ cms pi k3 install server red05

pi k3 install server red05

INFO: Installing K3s as stand-alone server on ['red05']

+-------+---------+--------------------------------------------------+

| host | success | stdout |

+-------+---------+--------------------------------------------------+

| red05 | True | [INFO] Finding release for channel stable |

| | | [INFO] Using v1.20.5+k3s1 as release |

| | | [INFO] Downloading hash https://github.com/k3s- |

| | | io/k3s/releases/download/v1.20.5+k3s1/sha256sum- |

| | | arm.txt |

| | | [INFO] Downloading binary https://github.com/k3 |

| | | s-io/k3s/releases/download/v1.20.5+k3s1/k3s-armh |

| | | f |

| | | [INFO] Verifying binary download |

| | | [INFO] Installing k3s to /usr/local/bin/k3s |

| | | [INFO] Creating /usr/local/bin/kubectl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/crictl symlink |

| | | to k3s |

| | | [INFO] Creating /usr/local/bin/ctr symlink to |

| | | k3s |

| | | [INFO] Creating killall script |